November 4, 2025

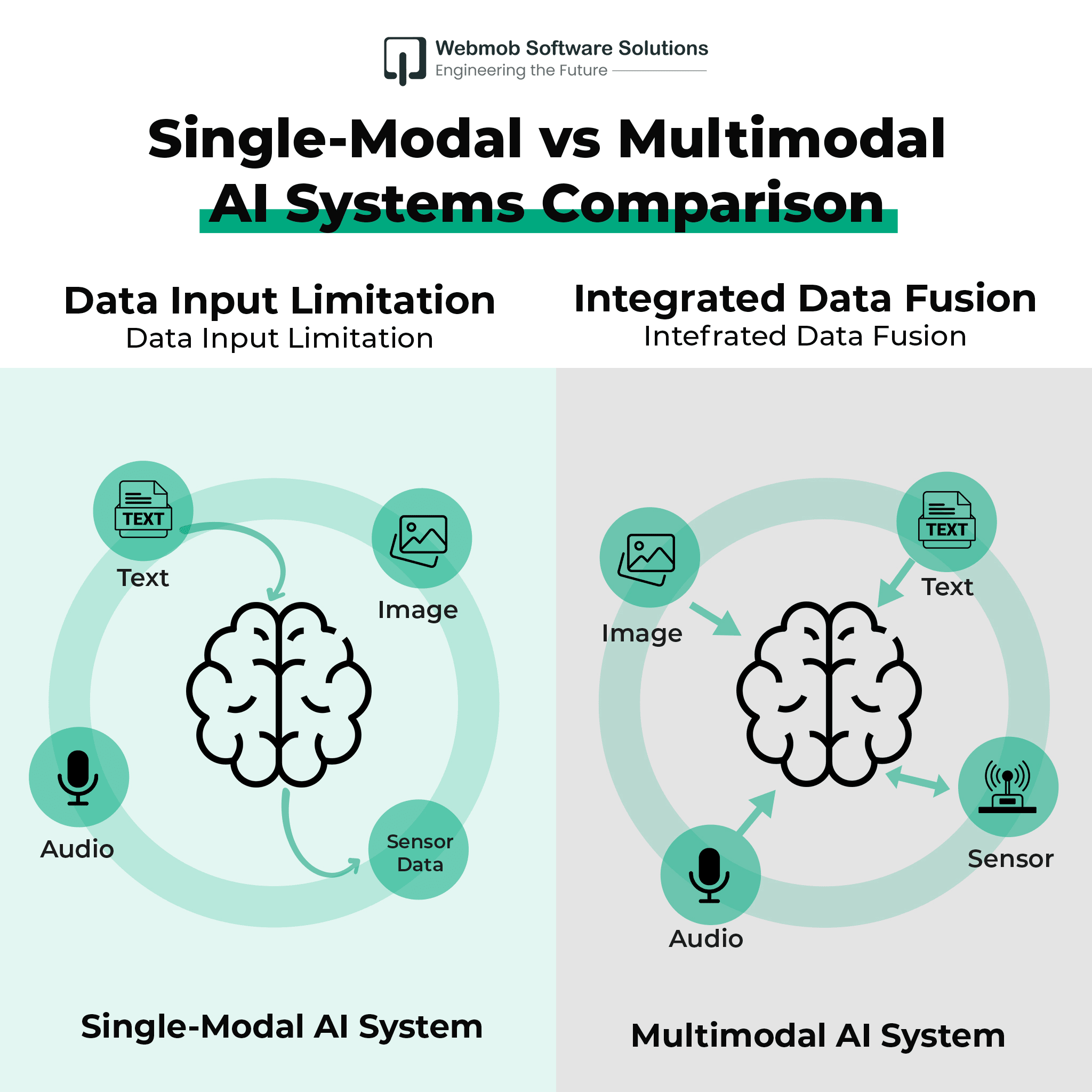

After working with dozens of AI implementations, we've noticed something interesting: companies that stick with traditional single-modal AI systems are hitting walls they didn't expect. You know the scenario - your image recognition works perfectly, your text analysis is spot-on, but when you need both working together? That's where things get messy.

Multimodal AI is becoming a necessity because real-world problems don't come in neat, single-data-type packages. When a customer emails a photo of a damaged product along with a complaint about poor service, you need AI that can assess the damage in the image, understand the emotional tone of the text, and even analyze voice recordings from previous calls. Single-modal systems? They're like having a team of specialists who refuse to talk to each other.

Traditional AI systems operate like specialists - they're incredibly good at one thing but struggle when faced with diverse data types. Multimodal AI models break down these barriers by processing information more like humans do. When you walk into a room, you don't just see or hear - you simultaneously process visual cues, sounds, smells, and contextual information to understand your environment completely.

The technical foundation of multimodal machine learning lies in fusion mechanisms that map different data types into a shared representation space. These systems use specialized encoder networks for each data type - whether text, images, audio, or sensor data - and then employ transformer architectures and attention mechanisms to identify meaningful connections across modalities.
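
To make the idea of a shared space and cross-modal attention concrete, here is a minimal sketch in plain Python. The projection weights, feature sizes, and function names are all hypothetical toy values chosen for illustration; a production system would learn these weights and use a deep learning framework rather than hand-written loops.

```python
import math

def project(vec, weights):
    """Linear 'encoder': map a modality-specific feature vector into the shared space."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query, keys, values):
    """Scaled dot-product attention of one query over keys/values from another modality."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    attended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return attended, weights

# Hypothetical toy weights: 3-d text features and 2-d image patch features,
# both projected into a 2-d shared space.
TEXT_W = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
IMAGE_W = [[1.0, 0.0], [0.0, 1.0]]

text_query = project([1.0, 0.0, 0.0], TEXT_W)
patches = [project(p, IMAGE_W) for p in ([2.0, 0.0], [0.0, 2.0])]

# The text token attends over the image patches in the shared space.
attended, weights = cross_attention(text_query, patches, patches)
print(weights)   # the first patch receives the larger attention weight
print(attended)
```

The text query points in the same direction as the first image patch, so that patch gets most of the attention weight. That is the basic mechanism by which one modality can pick out the relevant parts of another.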

What makes this particularly powerful is how different data types enhance each other. Visual information can clarify ambiguous text, audio tone can modify the interpretation of written words, and contextual data can improve the accuracy of image recognition. This creates a synergistic effect where the combined understanding exceeds the sum of individual parts.

The multimodal generative AI market has moved from experimental labs to commercial reality with remarkable speed. Current market estimates show growth from $1.6 billion in 2024 to a projected $42.38 billion by 2034, with a cited compound annual growth rate of 36.92%. This growth is driven by real applications solving complex business problems across multiple industries.
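
As a quick arithmetic check, the compound annual growth rate implied by two endpoints is (end / start)^(1 / years) - 1. The sketch below applies that formula to the figures quoted above; treating 2024 to 2034 as a ten-year span is our assumption. On that assumption the endpoints imply a rate close to 39%, so the quoted 36.92% presumably comes from a different base year or starting value in the underlying forecast.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Figures quoted in the text (billions of USD); the ten-year span is an assumption.
implied = cagr(1.6, 42.38, 2034 - 2024)
print(f"Implied CAGR 2024-2034: {implied:.2%}")
```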

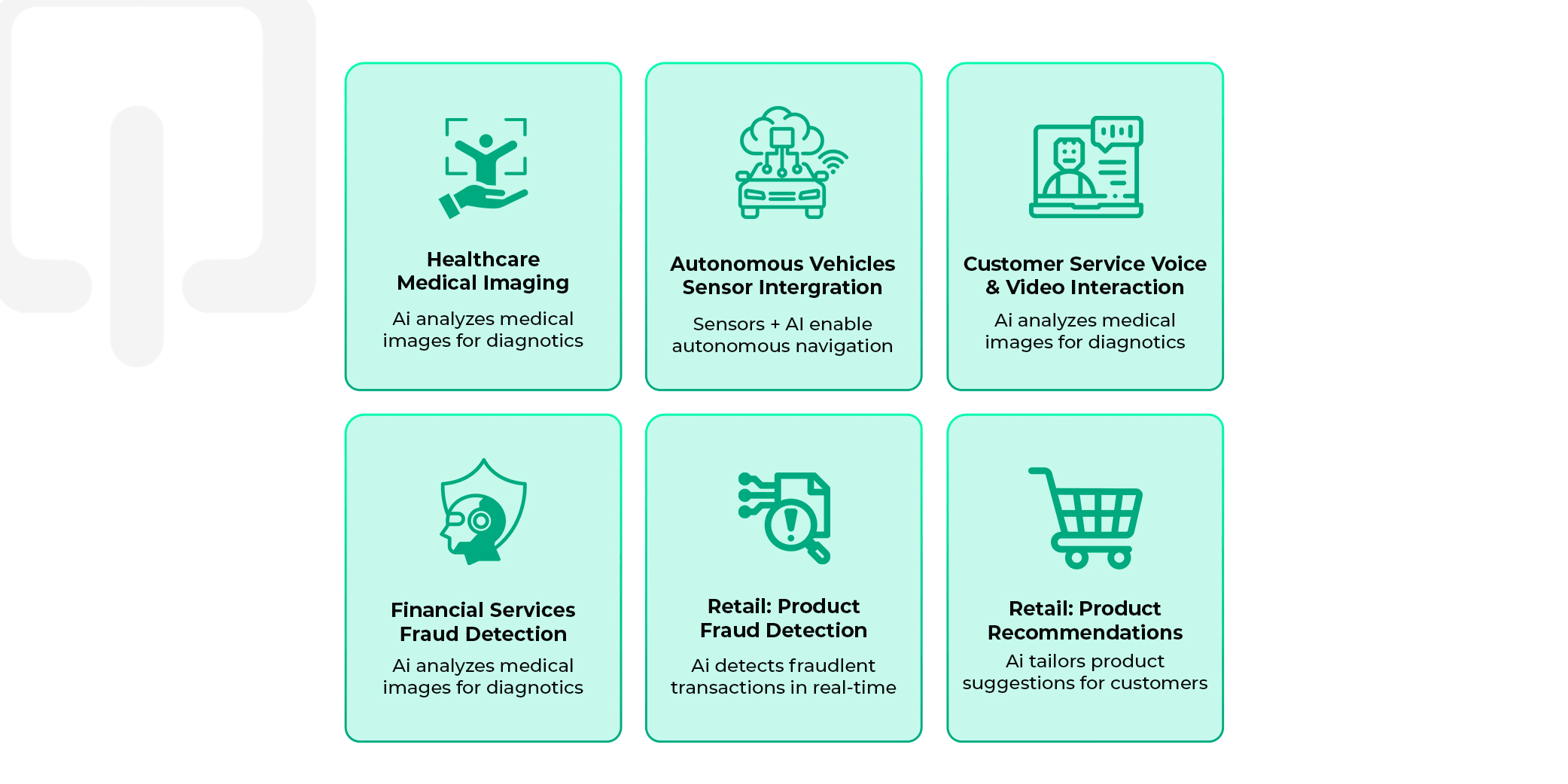

Healthcare organizations are combining medical imaging with patient records and clinical notes to improve diagnostic accuracy. Financial institutions integrate transaction patterns with behavioral analysis and document processing for enhanced fraud detection. Retail companies merge visual preferences with purchase history and browsing patterns to create truly personalized shopping experiences.

Multimodal AI is transforming medical diagnosis and treatment by combining diverse data sources. Radiologists now use systems that analyze medical images alongside patient history, laboratory results, and clinical notes to identify patterns invisible to single-modal approaches. These systems have demonstrated improved accuracy in detecting early-stage cancers and predicting patient outcomes by considering the complete clinical picture.

Drug discovery processes are accelerating through multimodal approaches that combine molecular structures, clinical trial data, and patient genomics. This comprehensive analysis helps pharmaceutical companies identify promising compounds faster and predict potential side effects more accurately.

The automotive industry relies heavily on multimodal AI agents for autonomous vehicle development. These systems simultaneously process camera feeds, LiDAR data, radar inputs, GPS coordinates, and real-time traffic information to make split-second decisions. The integration of multiple sensor types creates redundancy that significantly improves safety - if one sensor fails or provides unclear data, others can compensate.
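
The redundancy argument can be illustrated with a small confidence-weighted fusion sketch. The `Reading` type, the `fuse_distance` function, the confidence threshold, and the sample values are hypothetical; real perception stacks typically use far more elaborate estimators (Kalman filters, for example), so treat this only as an illustration of how remaining sensors can compensate for a failed one.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Reading:
    sensor: str
    distance_m: Optional[float]  # None means the sensor failed or returned nothing
    confidence: float            # 0.0 (unusable) to 1.0 (fully trusted)

def fuse_distance(readings: List[Reading], min_confidence: float = 0.2) -> Optional[float]:
    """Confidence-weighted average over the sensors that are currently usable."""
    usable = [
        r for r in readings
        if r.distance_m is not None and r.confidence >= min_confidence
    ]
    if not usable:
        return None  # no trustworthy sensor: the caller must fall back to a safe behavior
    total = sum(r.confidence for r in usable)
    return sum(r.distance_m * r.confidence for r in usable) / total

# The camera has failed (for example, due to glare); LiDAR and radar still agree roughly.
readings = [
    Reading("camera", None, 0.0),
    Reading("lidar", 24.0, 0.9),
    Reading("radar", 26.0, 0.6),
]
fused = fuse_distance(readings)
print(fused)
```

With the camera out, the estimate is still produced from LiDAR and radar, weighted toward the more confident sensor. Only when every sensor is unusable does the function return `None`.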

Sensor fusion technology combining LiDAR, cameras, and radar demonstrates how autonomous vehicles can reduce error rates by up to 90% and cut accident rates by up to 80%. Advanced driver assistance systems now use multimodal approaches to monitor both the vehicle's environment and the driver's state, combining facial recognition, voice analysis, and behavioral patterns to detect fatigue or distraction.

Multimodal generative AI is revolutionizing customer service by enabling more empathetic and contextually aware interactions. Modern customer support systems analyze voice tone, facial expressions (in video calls), and text content simultaneously to gauge customer emotion and urgency. This allows for more appropriate responses and better issue resolution.

Retail chatbots now process product images uploaded by customers alongside their text descriptions to provide more accurate recommendations and support. This multimodal approach reduces frustration and improves customer satisfaction significantly.

Banks and financial institutions use multimodal AI models to combine transaction patterns, document analysis, and behavioral biometrics for comprehensive fraud detection. These systems can identify suspicious activities by analyzing spending patterns alongside location data, device characteristics, and even typing rhythms.

Investment firms employ multimodal approaches to analyze market sentiment by combining news text, social media posts, audio from earnings calls, and visual data from financial charts to make more informed trading decisions.

Multimodal AI development requires 2-4 times more computational resources than traditional single-modal systems. Processing multiple data streams simultaneously demands sophisticated hardware architectures and optimized algorithms. However, advances in specialized AI chips and cloud computing infrastructure are making these requirements more manageable for organizations of various sizes.

Edge computing solutions are emerging to address real-time processing needs, bringing multimodal capabilities to mobile devices and IoT systems without requiring constant cloud connectivity.

The complexity of combining different data types with varying structures, quality levels, and temporal alignment creates significant technical challenges. Multimodal artificial intelligence systems must handle situations where some data streams are missing, corrupted, or out of sync.

Advanced preprocessing techniques and robust fusion algorithms are addressing these issues, creating systems that can maintain performance even when some input modalities are compromised.

Processing multiple data types simultaneously raises important privacy concerns. Multimodal AI systems have the potential to access more comprehensive personal information than ever before, necessitating careful consideration of data protection and user consent.

Organizations implementing these systems must establish clear guidelines for data usage, storage, and sharing while ensuring compliance with evolving privacy regulations.

Successful multimodal AI development begins with selecting appropriate architectural approaches. Transformer-based models have shown particular promise because they handle variable-length sequences and support cross-modal attention. However, the specific architecture choice depends on the use case, data types involved, and performance requirements.

Early fusion approaches combine raw data from different modalities before processing, while late fusion methods process each modality separately before combining results. Hybrid approaches that combine both strategies often provide the best performance for complex applications.
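
The difference between the two strategies is easiest to see side by side. In this minimal sketch, short feature lists stand in for raw inputs, and a toy logistic scorer stands in for a trained model; all weights, feature values, and function names are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def linear_score(features, weights, bias=0.0):
    """Toy stand-in for a trained model: logistic score of a weighted sum."""
    return sigmoid(sum(f * w for f, w in zip(features, weights)) + bias)

def early_fusion(image_feats, text_feats, joint_weights):
    """Concatenate the modalities first, then apply a single model to the joint vector."""
    return linear_score(image_feats + text_feats, joint_weights)

def late_fusion(image_feats, text_feats, image_weights, text_weights, mix=0.5):
    """Score each modality independently, then combine the two decisions."""
    p_image = linear_score(image_feats, image_weights)
    p_text = linear_score(text_feats, text_weights)
    return mix * p_image + (1.0 - mix) * p_text

image_feats = [0.8, 0.1]
text_feats = [0.3, 0.9, 0.5]

p_early = early_fusion(image_feats, text_feats, [1.0, -0.5, 0.2, 0.7, -0.3])
p_late = late_fusion(image_feats, text_feats, [1.0, -0.5], [0.2, 0.7, -0.3])
print(p_early, p_late)
```

Early fusion lets one model learn interactions between image and text features directly, but it needs every modality present at once. Late fusion keeps the per-modality models independent, which makes it easier to swap or drop a modality at the cost of missing those interactions. A hybrid design would typically feed both the joint representation and the per-modality scores into the final decision.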

The quality of multimodal systems depends heavily on proper data preprocessing and alignment. Different modalities often operate at different temporal scales - audio might be sampled at millisecond intervals while text updates occur over seconds or minutes. Effective preprocessing ensures all data streams are properly synchronized and normalized.
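
One simple way to bring streams with different time scales together is to aggregate the faster stream over the time windows of the slower one. The sketch below assumes audio features arrive as `(timestamp, value)` pairs and text arrives as timed segments; the function name, data layout, and sample values are illustrative assumptions rather than a fixed recipe.

```python
def align_audio_to_text(audio_frames, text_segments):
    """
    audio_frames:  list of (timestamp_seconds, value), e.g. per-frame loudness
    text_segments: list of (start_seconds, end_seconds, text)
    Returns one (text, mean_audio_value_or_None) pair per text segment.
    """
    aligned = []
    for start, end, text in text_segments:
        # Collect every audio frame that falls inside this segment's time window.
        window = [value for t, value in audio_frames if start <= t < end]
        mean = sum(window) / len(window) if window else None
        aligned.append((text, mean))
    return aligned

audio = [(0.00, 0.2), (0.01, 0.4), (0.02, 0.6), (1.50, 1.0)]
segments = [
    (0.0, 1.0, "my order arrived"),
    (1.0, 2.0, "damaged"),
    (2.0, 3.0, "again"),
]
aligned = align_audio_to_text(audio, segments)
for text, level in aligned:
    print(text, level)
```

The last segment has no audio frames in its window, so it is paired with `None` instead of a made-up value. Downstream code can then treat that segment as missing the audio modality.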

Data augmentation techniques specific to multimodal scenarios help improve model robustness and reduce overfitting by creating synthetic training examples that maintain cross-modal relationships.
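
Here is a small sketch of what "maintaining cross-modal relationships" can mean in practice: when an image is mirrored, any caption that mentions left or right has to be updated to match. The tiny grid image, the caption, and the `flip_pair` helper are hypothetical, and the word swap only handles lowercase "left" and "right".

```python
import re

_SWAP = {"left": "right", "right": "left"}

def flip_pair(image_rows, caption):
    """Mirror the image horizontally and swap left/right in the caption to keep them consistent."""
    flipped = [list(reversed(row)) for row in image_rows]
    new_caption = re.sub(
        r"\b(left|right)\b",
        lambda match: _SWAP[match.group(1)],
        caption,
    )
    return flipped, new_caption

# A 2x3 "image" where 1 marks a defect on the left edge.
image = [
    [1, 0, 0],
    [1, 0, 0],
]
caption = "scratch on the left edge"

aug_image, aug_caption = flip_pair(image, caption)
print(aug_image)
print(aug_caption)
```

Flipping the image without touching the caption would produce a training pair that contradicts itself, which is exactly the kind of synthetic example that hurts rather than helps.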

Traditional AI evaluation metrics often fall short when assessing multimodal AI models. New evaluation frameworks consider cross-modal consistency, robustness to missing modalities, and the system's ability to leverage complementary information from different data types.

Comprehensive testing protocols must account for various failure modes unique to multimodal systems, including modality conflicts and temporal misalignment issues.
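
Robustness to missing modalities can be measured with a simple ablation: evaluate once with every modality, then again with each modality removed. In this sketch, `predict` is a hypothetical stand-in for a real multimodal model, and the four-sample dataset is invented purely to show the shape of the report.

```python
def predict(scores, available):
    """Hypothetical stand-in for a model: average the scores of the available modalities."""
    used = [scores[m] for m in available if m in scores]
    if not used:
        return 0
    return int(sum(used) / len(used) >= 0.5)

def accuracy(dataset, available):
    correct = sum(predict(s["scores"], available) == s["label"] for s in dataset)
    return correct / len(dataset)

def ablation_report(dataset, modalities):
    """Accuracy with all modalities, then with each one dropped in turn."""
    report = {"all": accuracy(dataset, modalities)}
    for dropped in modalities:
        kept = [m for m in modalities if m != dropped]
        report[f"without_{dropped}"] = accuracy(dataset, kept)
    return report

dataset = [
    {"scores": {"image": 0.9, "text": 0.7}, "label": 1},
    {"scores": {"image": 0.2, "text": 0.1}, "label": 0},
    {"scores": {"image": 0.8, "text": 0.3}, "label": 1},
    {"scores": {"image": 0.6, "text": 0.1}, "label": 0},
]
report = ablation_report(dataset, ["image", "text"])
print(report)
# -> {'all': 1.0, 'without_image': 0.75, 'without_text': 0.75}
```

A large gap between the "all" row and a "without" row shows how much the system depends on that modality. A system that degrades gracefully, as in this toy data, is usually what you want in production.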

Given the complexity of multimodal AI implementation, many organizations are partnering with specialized providers of generative AI development services. These partnerships provide access to expertise in areas like architecture design, data preprocessing, model training, and deployment optimization.

A qualified generative AI development company brings experience across multiple industries and use cases, helping organizations avoid common pitfalls and accelerate time-to-market. These partnerships are particularly valuable for companies that lack extensive AI expertise but have clear business use cases for multimodal capabilities.

Partnerships with a multimodal AI company often include ongoing support for model updates, performance monitoring, and scaling as business needs evolve. This collaborative approach allows organizations to focus on their core business while leveraging cutting-edge AI capabilities.

The next generation of multimodal AI agents will operate with greater autonomy, making decisions and taking actions based on comprehensive environmental understanding. These agents will combine perception, reasoning, and action capabilities across multiple modalities to perform complex tasks with minimal human intervention.

Research into embodied AI systems that combine multimodal perception with physical interaction capabilities holds promise for applications in robotics, smart homes, and industrial automation.

Multimodal AI development is increasingly focusing on edge deployment scenarios where processing must occur locally due to latency, bandwidth, or privacy constraints. Optimized model architectures and specialized hardware are making sophisticated multimodal capabilities available on mobile devices and embedded systems.

Advanced multimodal generative AI systems are developing the ability to generate content in one modality based on input from another. These systems can create images from text descriptions, generate audio from visual content, or produce comprehensive reports combining multiple data sources.

Organizations considering multimodal artificial intelligence implementation should begin with a clear use case definition and success metrics. The complexity of these systems requires careful project planning and stakeholder alignment to ensure successful deployment.

Pilot projects focusing on specific business problems allow organizations to gain experience with multimodal approaches while demonstrating value before larger investments.

Successful multimodal machine learning implementation requires a robust infrastructure capable of handling diverse data types and computational demands. Cloud-based solutions often provide the flexibility and scalability needed for initial deployments, while edge computing becomes essential for real-time applications.

Building internal capabilities for multimodal AI development requires cross-functional teams with expertise in multiple domains. Data scientists, engineers, and domain experts must collaborate closely to ensure successful implementation and ongoing optimization.

Multimodal AI represents more than incremental improvement; it's a fundamental shift toward AI systems that understand and interact with the world in a manner more akin to humans. Organizations that begin implementing these capabilities today position themselves for competitive advantages that traditional single-modal approaches cannot match.

The convergence of improved architectures, specialized development services, and growing industry adoption suggests multimodal approaches will become the standard for sophisticated AI applications. As computational costs decrease and tools become more accessible, multimodal artificial intelligence will transform from cutting-edge technology to an essential business capability.

Based on our experience with dozens of implementations, the real question is how quickly organizations can adapt to leverage these capabilities. Those who start now, with proper planning and guidance from experienced AI development partners, will lead the transformation rather than struggle to catch up later.

Copyright © 2026 Webmob Software Solutions