January 29, 2026

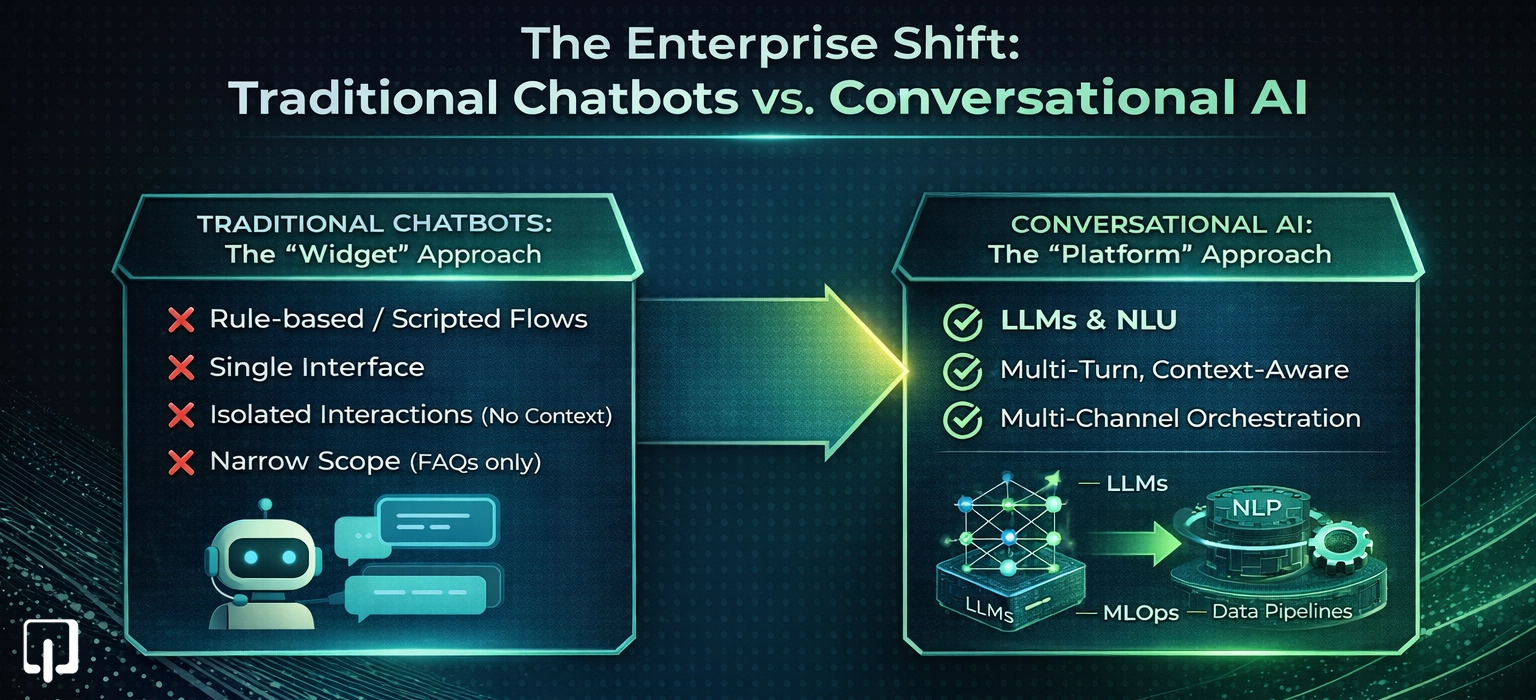

Conversational AI represents a comprehensive technology stack that combines Large Language Models (LLMs), Natural Language Processing (NLP), orchestration capabilities, and MLOps frameworks to deliver multi-turn, context-aware automation across multiple channels. In contrast, traditional chatbots serve as single-interface applications within this broader stack, typically relying on rule-based or FAQ-driven mechanisms with significantly narrower operational scope.

The distinction between these technologies is critical for enterprises planning their AI implementation strategy. A conversational AI development company must approach these systems as complete software and data platforms rather than simple widgets. This involves careful problem scoping, strategic architecture decisions, comprehensive model strategy, robust MLOps implementation, domain-specific data pipelines, stringent security compliance measures, and continuous optimization protocols.

Traditional chatbots are software applications that simulate conversation through predefined flows or intent-based interactions. Many legacy systems rely on menu-driven or keyword-based frameworks that cannot generalize beyond their scripted conversation paths. These systems treat each user message in isolation, so they lack the contextual awareness that complex interactions require.
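To make the limitation concrete, here is a minimal sketch of a keyword-driven bot. The rules, replies, and the `keyword_reply` function are hypothetical and exist only for illustration; a real legacy system would carry many more rules, but the lack of memory between turns would be the same.

```python
# Hypothetical keyword rules; invented for this illustration.
RULES = {
    "balance": "Your balance is available under Accounts > Overview.",
    "hours": "Our branches are open 9am to 5pm on weekdays.",
}
FALLBACK = "Sorry, I did not understand. Please choose: balance, hours."


def keyword_reply(message: str) -> str:
    """Return the first scripted answer whose keyword appears in the message."""
    text = message.lower()
    for keyword, answer in RULES.items():
        if keyword in text:
            return answer
    return FALLBACK


print(keyword_reply("What are your hours?"))
# The follow-up depends on the previous turn, which the bot never stored,
# so no keyword matches and the scripted fallback is returned.
print(keyword_reply("And on Saturday?"))
```

The second call shows the isolation problem: the follow-up question only makes sense in context, and a bot of this kind has none.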

Conversational AI systems deploy an end-to-end stack that uses NLP, machine learning, and LLMs to comprehend user intent, maintain conversation context, execute tool and API calls, and continuously learn from user interactions. These systems power interfaces such as chatbots, voicebots, and virtual assistants across multiple channels.

Enterprise AI chatbots integrate conversational AI applications with internal business systems such as CRM platforms, core banking infrastructure, Hospital Information Systems (HIS), Learning Management Systems (LMS), and property management systems. These implementations are governed through MLOps processes and security protocols, and are optimized for key performance indicators including Customer Satisfaction (CSAT), First Contact Resolution (FCR), Average Handle Time (AHT), and conversion metrics.

The AI in education sector demonstrates substantial growth potential, with market valuations estimated to expand from approximately USD 5.88 billion in 2024 to about USD 32.27 billion by 2030, representing a Compound Annual Growth Rate (CAGR) of 31.2%. This growth is primarily driven by increased demand for personalized learning solutions and administrative automation.

The interactive and AI-driven learning market segment is forecast to see approximately 7.2% growth from 2025 through 2032. North America currently holds a 41.6% market share, while the Asia-Pacific region is growing faster. Industry analysis indicates that conversational AI in banking and healthcare has moved from an experimental "nice-to-have" feature to a core infrastructure component for customer engagement and operational efficiency.

The following comparison table illustrates fundamental differences between traditional chatbot implementations and modern conversational AI systems:

This technical distinction positions conversational AI development companies and AI development firms to deliver comprehensive stack solutions focused on measurable business outcomes rather than simple scripted interactions.

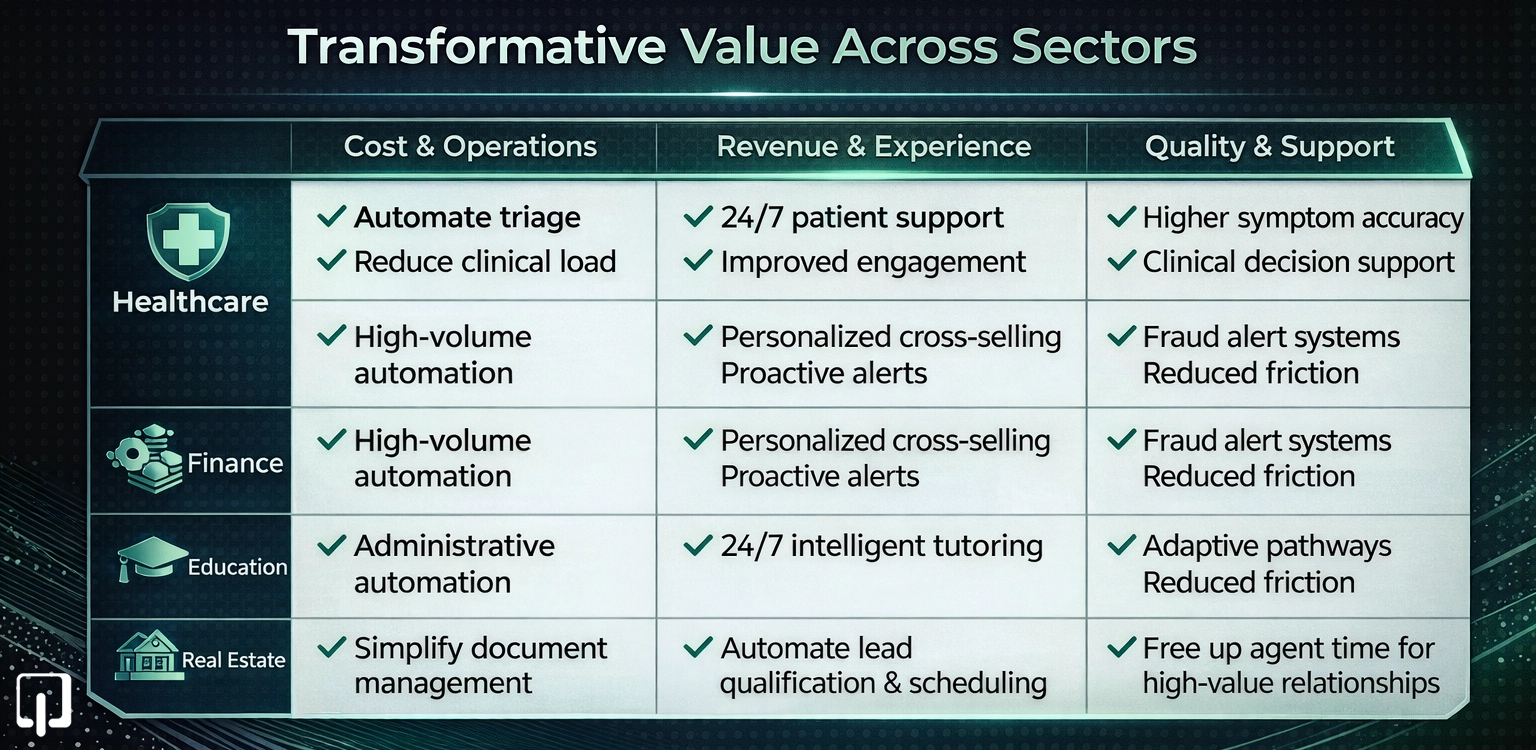

Banks and financial institutions implement conversational AI to automate high-volume customer interactions, reducing contact center operational loads and accelerating issue resolution while maintaining regulatory compliance standards. Healthcare organizations deploy AI assistants for patient triage, frequently asked question responses, and administrative task automation, which frees clinical staff time and reduces patient wait times.

Banking sector implementations support personalized cross-selling and upselling opportunities alongside proactive customer alerts, improving customer loyalty metrics and product penetration rates. Educational institutions utilize AI tutors and support systems to enable continuous 24/7 student assistance and adaptive learning pathways, significantly improving student satisfaction scores and retention rates.

Healthcare research studies report measurable improvements in symptom tracking accuracy, patient engagement levels, and clinical decision support when conversational agents operate under clinician oversight. Randomized controlled trials featuring LLM-based medical agents have demonstrated high patient satisfaction rates. Explainable AI designs incorporating hybrid human-in-the-loop architectures reduce safety risks, minimize bias concerns, and improve overall system trust.

Symptom checker applications, triage bots, and chronic disease companion systems guide patients through comprehensive history-taking processes, risk flag identification, and next-step recommendations. These implementations reduce unnecessary clinical visits while supporting remote care delivery models. Hybrid chatbot architectures combining AI automation with human handoff capabilities support chronic disease management and mental health interventions through reminder systems, continuous monitoring, and defined escalation pathways.

LLM-based conversational health agents assist with patient education, question answering, and clinical workflow tasks including documentation, case summarization, medical coding, and order suggestions within supervised clinical settings. Controlled deployment studies demonstrate high patient satisfaction and acceptance rates when physicians maintain supervisory oversight roles.

Banks deploy AI agents throughout customer onboarding processes, Know Your Customer (KYC) verification, account inquiries, card management workflows, loan application journeys, and fraud alert systems. These implementations reduce customer friction and manual processing requirements while improving overall convenience. Conversational interfaces additionally support internal use cases including policy lookup, compliance question answering, and operations support functions.

In project management contexts, financial institutions increasingly use AI, including conversational interfaces built on LLM and NLP technologies, for intelligent scheduling, risk detection, and automated documentation generation.

Conversational AI frameworks support career counseling applications that deliver personalized academic guidance based on student grades, performance patterns, and stated preferences. These systems demonstrate the practical potential of open-source conversational AI stacks. The technology enables continuous helpdesk availability, intelligent tutoring capabilities, grading assistance, and language learning support. The overall AI in education market is growing at a CAGR exceeding 30%.

Conversational AI simplifies property search processes, mortgage pre-qualification workflows, document management, and customer communication systems within real estate portals and brokerage platforms. Real estate agents utilize chatbots to manage property inquiries, schedule site visits, and respond to listing questions, freeing valuable time for high-value client relationship building.

Organizations must identify priority use cases such as Level 1 support, loan FAQ responses, or medical triage. Define target communication channels and establish success metrics including deflection rates, CSAT scores, FCR percentages, conversion rates, and other relevant KPIs. Document compliance requirements and safety constraints including HIPAA and GDPR regulations, banking secrecy standards, Protected Health Information (PHI) and Personally Identifiable Information (PII) handling protocols, escalation rules, and supported languages and regional requirements.
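Success metrics are easier to agree on when their formulas are written down. The sketch below computes three of the KPIs named above from conversation records. The `Conversation` fields and the convention of counting survey scores of 4 or 5 as "satisfied" are assumptions for illustration; teams should substitute their own definitions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Conversation:
    escalated: bool          # handed off to a human agent
    resolved_first: bool     # issue resolved on first contact
    csat: Optional[int]      # 1-5 survey score, None if no survey was answered


def kpi_summary(conversations: List[Conversation]) -> Dict[str, Optional[float]]:
    """Compute deflection rate, FCR, and CSAT as fractions between 0 and 1."""
    total = len(conversations)
    if total == 0:
        raise ValueError("at least one conversation is required")
    rated = [c.csat for c in conversations if c.csat is not None]
    return {
        # Share of conversations handled without a human handoff.
        "deflection_rate": sum(not c.escalated for c in conversations) / total,
        # Share of conversations resolved on first contact.
        "fcr": sum(c.resolved_first for c in conversations) / total,
        # Assumed convention: share of answered surveys scoring 4 or 5.
        "csat": sum(s >= 4 for s in rated) / len(rated) if rated else None,
    }


sample = [
    Conversation(escalated=False, resolved_first=True, csat=5),
    Conversation(escalated=True, resolved_first=False, csat=2),
    Conversation(escalated=False, resolved_first=True, csat=None),
    Conversation(escalated=False, resolved_first=False, csat=4),
]
print(kpi_summary(sample))
```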

Conduct a comprehensive inventory of data sources, including knowledge bases, policy documentation, FAQ repositories, support tickets, call transcripts, and system data from EMR, EHR, LMS, and core banking platforms. Clean, annotate, and structure the collected data. Define intents and entities for NLU implementations, or establish retrieval corpora and tool configurations for LLM-centric architectures.
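For NLU-style implementations, an intent definition usually pairs example utterances with the entities to extract. The following is a minimal sketch of that structure, not a specific framework's schema. The `Intent` class, the `loan_status` intent, and the `LN-` application ID format are all invented for illustration.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Intent:
    name: str
    examples: List[str]                                      # sample user utterances
    entities: Dict[str, str] = field(default_factory=dict)   # entity name -> regex


# Hypothetical intent; the "LN-" ID format is an assumption for this example.
LOAN_STATUS = Intent(
    name="loan_status",
    examples=["Where is my loan application?", "Status of LN-123456"],
    entities={"application_id": r"\bLN-\d{6}\b"},
)


def extract_entities(intent: Intent, utterance: str) -> Dict[str, str]:
    """Return every entity whose pattern matches somewhere in the utterance."""
    found = {}
    for entity_name, pattern in intent.entities.items():
        match = re.search(pattern, utterance)
        if match:
            found[entity_name] = match.group(0)
    return found


print(extract_entities(LOAN_STATUS, "Any update on LN-654321?"))
```

Regex-based extraction is only one option; trained entity recognizers or LLM-based extraction may fit better when entity formats are less regular.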

Common architectural patterns include:

Select appropriate foundation models, evaluating open-source versus API-based options based on latency requirements, cost constraints, domain adaptation needs, and data residency compliance requirements.

Conversation design should commence with limited scope and iterate progressively. Define conversation flows, establish appropriate tone, specify escalation triggers, design error recovery mechanisms, and implement fallback behaviors. Deploy comprehensive safety measures including content filters, domain-specific constraints, appropriate disclaimers, clinician or human-in-the-loop oversight for sensitive domains (healthcare and finance), and counter-anthropomorphic user interface elements where necessary.
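Escalation triggers and fallback behaviors are easier to review when they are expressed as explicit rules. The sketch below shows one possible ordering of checks. The sensitive terms, the confidence threshold, and the failed-turn limit are placeholder assumptions, not recommended values.

```python
# Hypothetical trigger phrases; a real deployment would define these with
# clinical, legal, or compliance stakeholders.
SENSITIVE_TERMS = ("chest pain", "suicide", "fraud")


def next_action(message: str, confidence: float, failed_turns: int,
                threshold: float = 0.6, max_failed: int = 2) -> str:
    """Decide whether to answer, ask a clarifying question, or hand off.

    confidence   -- assumed score in [0, 1] from the intent or answer model
    failed_turns -- consecutive turns in which the bot could not help
    """
    text = message.lower()
    # Safety first: sensitive topics always go to a human.
    if any(term in text for term in SENSITIVE_TERMS):
        return "escalate"
    # Error recovery: stop looping after repeated failures.
    if failed_turns >= max_failed:
        return "escalate"
    # Fallback: low confidence leads to a clarifying question, not a guess.
    if confidence < threshold:
        return "clarify"
    return "answer"


print(next_action("I have chest pain", confidence=0.95, failed_turns=0))
print(next_action("Can I change my plan?", confidence=0.4, failed_turns=0))
```

Placing the safety check first means a confident model cannot override an escalation rule, which matches the human-in-the-loop requirement for sensitive domains.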

Establish connections to CRM platforms, ticketing systems, EMR and EHR databases, core banking infrastructure, property management systems, and analytics platforms to enable actionable responses beyond simple information retrieval. Deploy solutions across web properties, mobile applications, messaging platforms, Interactive Voice Response (IVR) systems, and contact center tooling while maintaining consistent user identity and conversation context.

Construct a Minimum Viable Product (MVP) or Proof of Concept (PoC) early in the development cycle. Conduct testing with internal user groups and execute controlled pilot programs to validate intent coverage and measure established KPIs. Utilize replay testing methodologies and A/B testing frameworks to evaluate model updates against historical conversation data and live traffic samples.
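Replay testing can be sketched as a loop that feeds historical messages to a candidate model and compares its output with the recorded, correct outcome. The `replay` function, the sample history, and the toy `keyword_intent` model below are hypothetical stand-ins for a real harness and classifier.

```python
from typing import Callable, List, Tuple


def replay(history: List[Tuple[str, str]],
           model: Callable[[str], str]) -> Tuple[float, List[Tuple[str, str, str]]]:
    """Run each historical message through the model.

    Returns the accuracy and a list of (message, expected, predicted) failures.
    """
    failures = []
    for message, expected in history:
        predicted = model(message)
        if predicted != expected:
            failures.append((message, expected, predicted))
    accuracy = 1 - len(failures) / len(history)
    return accuracy, failures


def keyword_intent(message: str) -> str:
    """Toy candidate model used only to exercise the harness."""
    text = message.lower()
    if "balance" in text:
        return "balance"
    if "block" in text or "lost" in text:
        return "card_block"
    return "unknown"


# Hypothetical labelled history: (user message, intent confirmed by an agent).
HISTORY = [
    ("I lost my card", "card_block"),
    ("Block my card please", "card_block"),
    ("What is my balance?", "balance"),
    ("My card was stolen", "card_block"),
]

accuracy, failures = replay(HISTORY, keyword_intent)
print(accuracy, failures)
```

Running the same history against the current and the candidate model gives a like-for-like comparison before any live A/B traffic is involved.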

Implement MLOps frameworks to manage model versions, data pipeline orchestration, training job execution, and deployment workflows. Continuously monitor system drift, quality metrics, and latency performance. Execute ongoing retraining or fine-tuning procedures with newly collected data, incorporate user feedback mechanisms, and refine conversation design elements over time.

Maintain comprehensive tracking of model versions, prompt configurations, dataset iterations, and system configurations. Ensure rollback capabilities exist to safely revert changes if performance regressions occur during updates.
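One way to picture this is a registry that records each deployed combination of model, prompt, and dataset versions and can step back to the previous one. The `Release` and `ReleaseRegistry` names and the version strings are illustrative assumptions; production teams would more likely rely on a dedicated model registry or deployment tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Release:
    model_version: str
    prompt_version: str
    dataset_version: str


class ReleaseRegistry:
    """Keeps an ordered history of releases; the last entry is active."""

    def __init__(self) -> None:
        self._history: List[Release] = []

    def deploy(self, release: Release) -> None:
        self._history.append(release)

    @property
    def active(self) -> Release:
        if not self._history:
            raise LookupError("nothing has been deployed yet")
        return self._history[-1]

    def rollback(self) -> Release:
        """Drop the active release and return the one that is active again."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self._history.pop()
        return self.active


registry = ReleaseRegistry()
registry.deploy(Release("model-1.0", "prompt-3", "data-2025-01"))
registry.deploy(Release("model-1.1", "prompt-4", "data-2025-02"))
print(registry.rollback())  # the first release becomes active again
```

Versioning the prompt and dataset together with the model matters because a regression can come from any of the three.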

Implement separation of PII and PHI from training corpora where regulatory requirements mandate. Apply appropriate anonymization techniques and access control mechanisms. Log only essential information required for system improvement while maintaining compliance standards.
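As a rough illustration of logging only what is needed, the sketch below masks email addresses and phone-like numbers before a message is written to a log. The two regular expressions are simplified assumptions and would miss many real identifiers, so they are not a substitute for a vetted de-identification process.

```python
import re

# Simplified, illustrative patterns; real PII and PHI detection needs far
# broader coverage (names, addresses, account and record numbers, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Mail jane.doe@example.com or call +1 415-555-0100 today"))
```

Redacting at the point of logging keeps raw identifiers out of downstream training corpora by default, rather than relying on later cleanup.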

Combine offline evaluation metrics (accuracy measurements, task success rates) with online performance indicators (CSAT scores, containment rates, escalation frequencies, revenue impact, safety incident tracking). Implement replay test harnesses and automated test suites for regression testing when modifying models or prompt configurations.

Healthcare, mental health, and financial applications require human review or supervision capabilities with clear user disclaimers. Design AI systems as augmentation layers rather than complete replacements for clinical professionals or financial advisors.

Plan comprehensive fallback strategies if LLM API services fail, including local model deployment or rule-based flow alternatives. Implement rate-limiting protections and establish cost and latency control mechanisms.
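The two ideas in this paragraph can be sketched together: an ordered chain of answer providers that degrades gracefully, and a token bucket that caps request rates. All names here (`answer_with_fallback`, `TokenBucket`, the handoff message) are hypothetical, and the providers are plain callables standing in for an LLM API client, a local model, or a rule-based flow.

```python
import time
from typing import Callable, List

HANDOFF = "All assistants are unavailable; routing you to a human agent."


def answer_with_fallback(message: str,
                         providers: List[Callable[[str], str]]) -> str:
    """Try each provider in order and return the first successful answer."""
    for provider in providers:
        try:
            return provider(message)
        except Exception:
            continue  # provider failed; try the next, cheaper or simpler one
    return HANDOFF


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at `rate` per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def unavailable_llm(message: str) -> str:
    raise TimeoutError("simulated API outage")


def rule_based(message: str) -> str:
    return "Please see our FAQ page for this topic."


print(answer_with_fallback("How do I reset my PIN?", [unavailable_llm, rule_based]))
```

A rate limiter placed in front of the primary provider also acts as a cost control, since rejected requests can be routed to the cheaper fallback instead.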

Custom AI chatbot projects delivered through development agencies or specialized firms typically require end-to-end investments spanning design, integration, and deployment phases. Projects generally range from approximately USD 15,000 to upward of USD 300,000 depending on complexity factors, data requirements, and integration scope.

Multiple industry pricing guides published in 2025 indicate custom LLM-powered enterprise chatbot implementations often fall between approximately USD 75,000 and USD 500,000 or higher. Highly regulated medical and financial systems frequently reach elevated budget ranges due to extensive compliance requirements and deep integration complexity.

Enterprise organizations commonly allocate approximately 15-20% of initial development costs annually for maintenance activities and system improvements. These ongoing investments cover new intent development, connector implementation, security updates, and continuous optimization efforts.
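The budgeting arithmetic is simple enough to sketch. The example below assumes a hypothetical initial build cost of USD 100,000 and a three-year horizon, and applies the 15-20% annual maintenance range as a flat percentage of the initial cost. Inflation, scaling costs, and usage-based API fees are deliberately ignored.

```python
def total_cost_of_ownership(initial_cost: float, years: int,
                            maintenance_rate: float) -> float:
    """Initial build cost plus flat annual maintenance over the given years."""
    annual_maintenance = initial_cost * maintenance_rate
    return initial_cost + annual_maintenance * years


# Hypothetical project: USD 100,000 build, evaluated over three years.
for rate in (0.15, 0.20):
    tco = total_cost_of_ownership(100_000, years=3, maintenance_rate=rate)
    print(f"{rate:.0%} maintenance: USD {tco:,.0f} over 3 years")
```

Under these assumptions the three-year total lands between USD 145,000 and USD 160,000, which shows how maintenance can add roughly half of the build cost again within a few years.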

Subscription-based and no-code platform options can start at or below roughly USD 20-50 per month for basic chatbot functionality, scaling to several thousand dollars per month for enterprise-ready generative AI assistant implementations.

Total Cost of Ownership (TCO) is fundamentally driven by use case scope, supported channels, required integrations, compliance obligations, and anticipated traffic volumes.

Complex enterprise environments require AI engineers with comprehensive understanding of both machine learning and LLM internals alongside systems integration expertise covering APIs, data warehouses, and legacy system architectures. These professionals must navigate regulatory constraints and user experience requirements specific to sensitive industries.

Experienced development teams reduce organizational risk related to ethical considerations, safety protocols, and reputational concerns by embedding appropriate safeguards and governance frameworks from project inception. A conversational AI development company brings strategic value through:

Healthcare organizations deploy symptom checkers and chronic disease management companions that provide 24/7 patient support while reducing clinical workload. Financial institutions utilize onboarding assistants that streamline KYC processes and account setup workflows while maintaining compliance standards. Educational platforms implement intelligent tutoring systems offering personalized learning pathways and continuous academic support. Real estate firms leverage property search assistants that qualify leads and schedule viewings automatically.

Organizations seeking to implement enterprise-grade conversational AI benefit from consulting partnerships that provide comprehensive guidance throughout the implementation lifecycle. Professional consulting services address architecture selection, model strategy development, MLOps framework design, security and compliance alignment, and continuous optimization methodologies. These partnerships ensure organizations avoid common implementation pitfalls while accelerating time-to-value.

Building enterprise-grade conversational AI requires a strategic approach combining technical expertise, industry knowledge, and operational discipline. Organizations must evaluate the distinction between traditional chatbots and comprehensive conversational AI systems, understanding that successful implementations demand treating these solutions as complete software platforms rather than simple widgets. Investment considerations span initial development costs ranging from USD 15,000 to USD 500,000 or higher, alongside ongoing operational expenses of 15-20% annually. Success depends on careful use case selection, appropriate architecture choices, robust MLOps practices, and continuous optimization. Organizations that hire AI developers and partner with experienced conversational AI development companies position themselves to realize substantial benefits across cost reduction, revenue enhancement, and customer experience improvement while maintaining necessary compliance and safety standards.

Conversational AI utilizes comprehensive NLP, machine learning, and LLM technologies to maintain context across conversation turns and continuously learn from interactions. Traditional chatbots rely on predefined flows and keyword matching, treating each message independently without contextual awareness.

Custom conversational AI chatbot development typically ranges from approximately USD 15,000 to USD 300,000 for standard implementations. Highly regulated enterprise systems in the healthcare and finance sectors often fall between USD 75,000 and USD 500,000 or higher due to compliance requirements and integration complexity.

Healthcare conversational AI provides patient triage capabilities, reduces clinical workload through automated FAQ responses, enables 24/7 patient support, improves symptom tracking accuracy, and supports chronic disease management with proper clinical oversight.

Development timelines vary based on scope complexity. A basic MVP can be developed in 8-12 weeks, while comprehensive enterprise implementations with multiple integrations, compliance requirements, and MLOps frameworks typically require 4-6 months or longer.

Healthcare, banking and fintech, education, and real estate sectors demonstrate substantial benefits. Healthcare gains operational efficiency and patient engagement improvements. Banking automates customer service and compliance workflows. Education provides personalized tutoring. Real estate streamlines lead management and property journeys.

Essential MLOps practices include maintaining model version control, implementing comprehensive data governance and privacy protection, combining offline and online evaluation metrics, establishing human-in-the-loop safety protocols for sensitive domains, and planning operational resilience with appropriate failover mechanisms.

Complex enterprise requirements involving sensitive data, regulatory compliance, deep system integrations, and custom workflows benefit from hiring experienced AI developers. No-code platforms suit simpler use cases with standard requirements and limited integration needs.

RAG enables conversational AI systems to retrieve relevant information from proprietary knowledge bases and documentation during conversations, allowing LLMs to provide accurate, domain-specific responses grounded in organizational data rather than relying solely on pre-trained knowledge.
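The retrieve-then-generate flow can be sketched in a few lines. This toy version ranks documents by word overlap with the question and builds a grounded prompt. Production systems typically use embedding-based vector search instead, and the documents, function names, and prompt wording here are invented for illustration. The call to an LLM is intentionally left out.

```python
import re
from typing import List, Set

# Hypothetical knowledge-base snippets.
DOCS = [
    "Wire transfers above USD 10,000 require two approvals.",
    "Branch opening hours are 9am to 5pm on weekdays.",
    "Lost cards can be blocked in the mobile app.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents sharing the most tokens with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_tokens & tokenize(d)),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: List[str]) -> str:
    """Assemble a prompt that restricts the model to the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("What are the opening hours?", DOCS))
```

The resulting prompt would then be sent to the chosen LLM, so that the answer is grounded in the organization's own documents rather than only in pre-trained knowledge.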

Safety measures include implementing content filters, establishing domain-specific constraints, requiring human oversight for sensitive decisions, maintaining clear disclaimers about AI limitations, applying data anonymization, and designing systems as augmentation tools rather than complete replacements for professionals.

Organizations typically allocate 15-20% of initial development costs annually for ongoing maintenance, including new intent development, system updates, security patches, performance optimization, data pipeline maintenance, and continuous model improvement based on user feedback and interaction data.

Copyright © 2025 Webmob Software Solutions