February 11, 2026

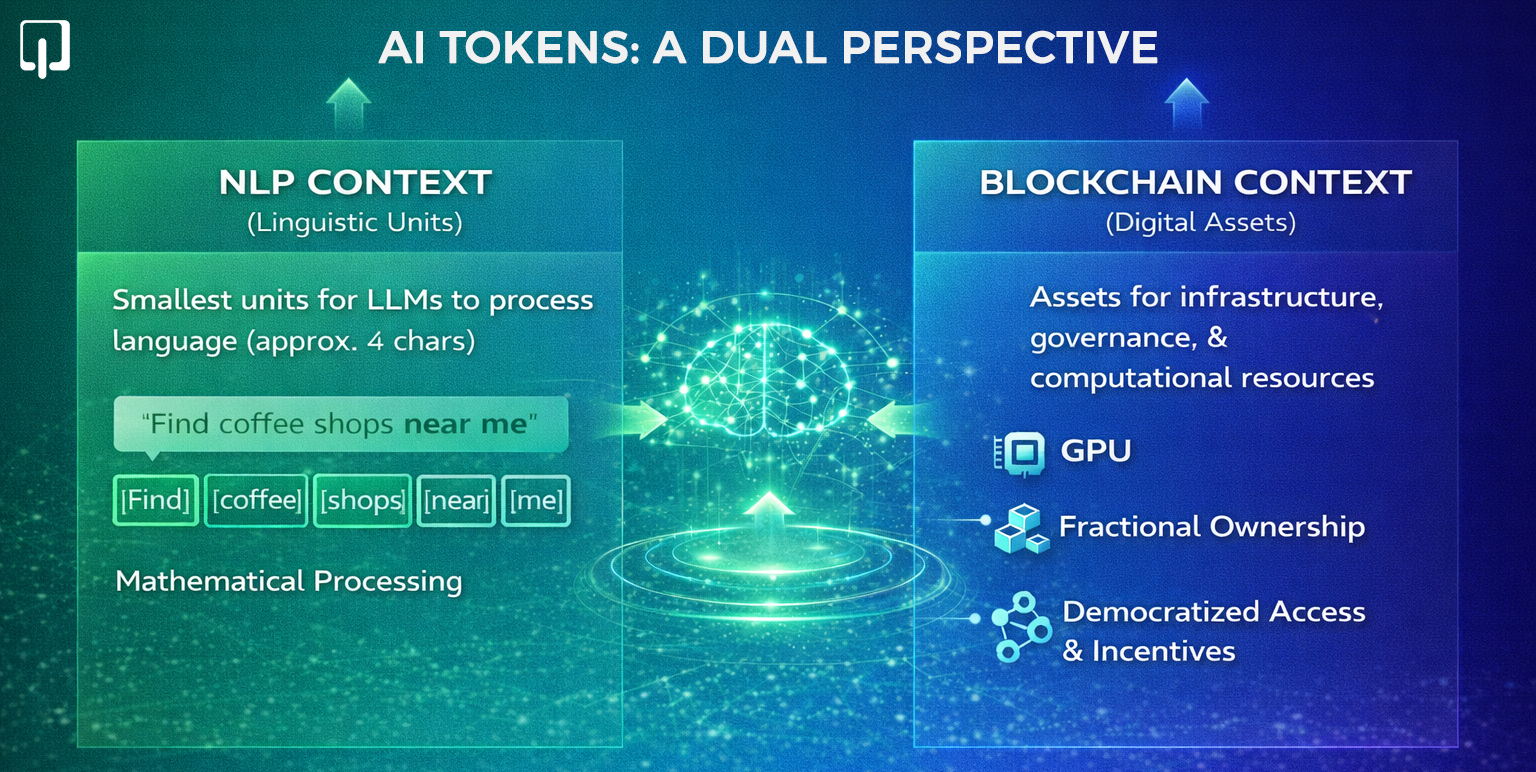

The term "AI tokens" covers two interconnected technological domains that are reshaping digital infrastructure in 2026. In Natural Language Processing (NLP), AI tokens are the smallest linguistic units that enable the Large Language Models (LLMs) behind products like ChatGPT and Microsoft Copilot to process and generate human language. In blockchain, AI tokens are digital assets that democratize access to artificial intelligence infrastructure, governance, and computational resources.

Understanding how AI tokens work requires examining both dimensions. In NLP contexts, one token typically represents approximately four characters in English text or roughly three-quarters of a word. For instance, "Find coffee shops near me" tokenizes into five distinct units that AI systems process mathematically. The phrase "AI is revolutionizing market research" comprised eleven tokens in GPT-3 but only eight tokens in GPT-4o, demonstrating continuous efficiency improvements in tokenization algorithms.
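The rule of thumb above can be turned into a quick estimator. This is a minimal sketch, not a real tokenizer: the function names are illustrative, and the two constants are simply the "four characters" and "three-quarters of a word" heuristics from the text.

```python
import math

# Rules of thumb quoted in the text; real tokenizers will differ.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def estimate_tokens_by_chars(text: str) -> int:
    """Estimate token count from character length (spaces included)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_by_words(text: str) -> int:
    """Estimate token count from whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


sample = "Find coffee shops near me"
by_chars = estimate_tokens_by_chars(sample)  # 25 characters -> 7
by_words = estimate_tokens_by_words(sample)  # 5 words -> 7
print(by_chars, by_words)
```

Both heuristics give 7 for a phrase that the text says tokenizes into five units, which is a useful reminder that these ratios are averages for budgeting, not exact counts.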

In blockchain environments, AI tokens enable fractional ownership of GPU infrastructure, facilitate governance through decentralized voting, and incentivize participation in distributed AI networks. The global GPU market is projected to grow from $65.27 billion in 2024 to $274.21 billion by 2029, underscoring the need for AI tokenization to scale computational infrastructure.

The tokenization process transforms continuous text into discrete, manageable units through four systematic steps: normalization (converting text to a standardized form, such as lowercase in some tokenizers), decomposition (breaking text into individual tokens), numerical assignment (mapping each distinct token to a unique token ID), and pattern recognition (analyzing relationships between tokens for probabilistic predictions).
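The four steps can be sketched in a few lines. This is a toy illustration under simplifying assumptions: decomposition is a plain whitespace split, IDs are assigned in order of first appearance, and "pattern recognition" is reduced to counting which token ID follows which. All function names are hypothetical, and production LLM tokenizers are considerably more involved.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Step 1: standardize the text (here: trim and lowercase)."""
    return text.strip().lower()


def decompose(text: str) -> list:
    """Step 2: break text into tokens (here: split on whitespace)."""
    return text.split()


def assign_ids(tokens: list) -> dict:
    """Step 3: give each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def follower_counts(ids: list) -> Counter:
    """Step 4 (toy version): count how often each ID is followed by each other ID."""
    return Counter(zip(ids, ids[1:]))


text = "The cat sat on the mat"
tokens = decompose(normalize(text))
vocab = assign_ids(tokens)
ids = [vocab[token] for token in tokens]
patterns = follower_counts(ids)
print(ids)  # [0, 1, 2, 3, 0, 4]
```

In a real model the counting step is replaced by learned parameters, but the input is the same: a sequence of integer IDs rather than raw characters.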

AI token development employs multiple tokenization approaches, each optimized for specific applications and linguistic characteristics. The selection of appropriate tokenization methods directly impacts model performance, computational efficiency, and the capability to handle diverse language structures.

Subword tokenization with Byte-Pair Encoding has emerged as particularly important for modern AI token development. BPE effectively handles out-of-vocabulary words by breaking them into recognizable subword units, allowing models to understand and generate vastly wider ranges of terms including rare or entirely novel words. This capability proves essential for technical documentation, specialized domains, and multilingual applications.
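To make the idea concrete, here is a minimal sketch of BPE merge learning on a tiny hand-made corpus. It assumes character-level starting symbols, no end-of-word marker, and a deterministic tie-break chosen only for reproducibility; the function names are illustrative, and production tokenizers add byte-level handling and much larger vocabularies.

```python
from collections import Counter


def merge_pair(symbols: tuple, pair: tuple) -> tuple:
    """Replace every adjacent occurrence of `pair` in `symbols` with the joined symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_merges(words: list, num_merges: int) -> list:
    """Learn up to `num_merges` merges by repeatedly joining the most frequent adjacent pair."""
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Most frequent pair; ties are broken by the pair itself so runs are reproducible.
        best = max(pair_counts, key=lambda p: (pair_counts[p], p))
        merges.append(best)
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[merge_pair(symbols, best)] += freq
        corpus = new_corpus
    return merges


def segment(word: str, merges: list) -> list:
    """Split a word into subword units by applying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = learn_merges(["low", "low", "lower", "lowest"], num_merges=2)
print(merges)                    # [('o', 'w'), ('l', 'ow')]
print(segment("lowly", merges))  # ['low', 'l', 'y']
```

The word "lowly" never appears in the training corpus, yet it still segments into the known unit "low" plus single characters, which is the out-of-vocabulary behavior described above.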

Different LLMs implement different context windows, which set the maximum number of tokens a model can handle at once. Llama 3 supports about 8,000 tokens, suitable for article summaries and brief conversations. GPT-3.5-turbo handles about 16,000 tokens for extended dialogues and document analysis. GPT-4 Turbo processes 128,000 tokens, enabling complex legal reviews and lengthy code generation. Claude 3 manages 200,000 tokens for comprehensive book analysis and detailed manual processing.
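A context window acts as a budget shared by the prompt and the response. The sketch below checks which models could hold a given request, using the rounded figures quoted above; the dictionary labels and the function name are illustrative, and exact limits should be confirmed in each provider's documentation.

```python
# Approximate context windows (in tokens) as quoted in this article.
# Rounded figures for illustration only; check provider documentation for exact limits.
CONTEXT_WINDOWS = {
    "Llama 3": 8_000,
    "GPT-3.5-turbo": 16_000,
    "GPT-4 (128K)": 128_000,
    "Claude-3": 200_000,
}


def models_that_fit(prompt_tokens: int, reserved_output_tokens: int) -> list:
    """Return the models whose window can hold the prompt plus the reserved output."""
    needed = prompt_tokens + reserved_output_tokens
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


# A 15,000-token document with 2,000 tokens reserved for the answer needs 17,000 tokens.
print(models_that_fit(15_000, 2_000))  # ['GPT-4 (128K)', 'Claude-3']
```

Reserving room for the output matters because a prompt that fills the entire window leaves the model no space to answer.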

Blockchain-based AI tokens represent the convergence of artificial intelligence infrastructure and decentralized finance systems. These digital assets enable businesses to establish presence in crypto ecosystems while democratizing access to high-performance computational resources. AI token development on blockchain platforms serves three primary functions: facilitating financial transactions for AI services, enabling protocol governance through token holder voting, and incentivizing user participation by rewarding contributors of data and computational power.

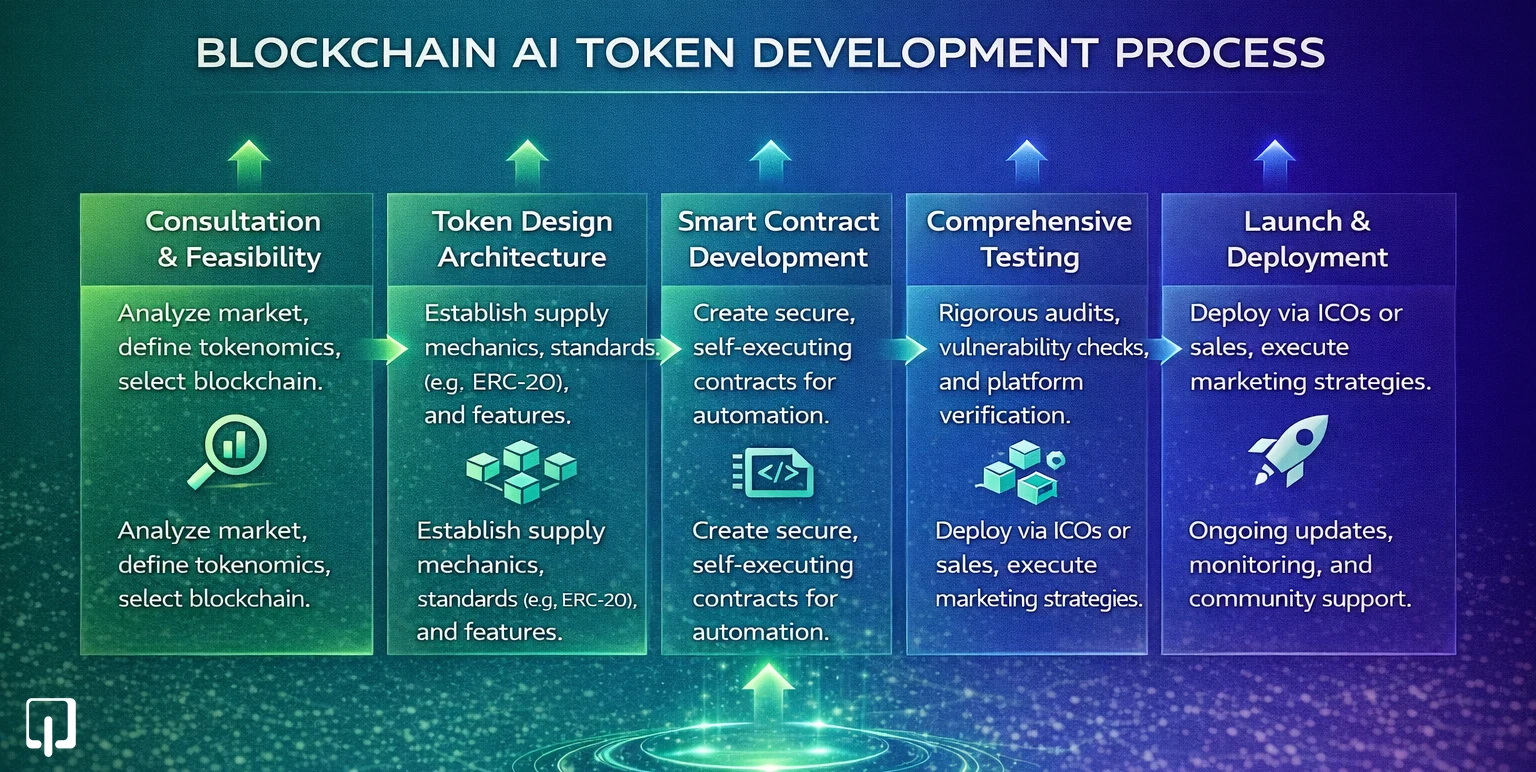

The AI token development process follows systematic stages from conception through post-launch optimization:

1. Consultation and feasibility analysis. Development begins with strategic blockchain consulting, where teams analyze market trends, assess technical requirements, and evaluate project viability. Experts define token economics, identify target audiences, select a suitable blockchain, and establish competitive positioning.

2. Token design. Teams establish the core token architecture, including tokenomics (supply mechanics, distribution schedules), implementation standards (ERC-20 for Ethereum, BEP-20 for Binance Smart Chain, SPL for Solana), and feature specifications aligned with project goals.

3. Smart contract development. Developers create self-executing smart contracts that automate token operations, implement governance mechanisms, and enforce compliance rules. These contracts must be robust, secure, and efficient enough to handle their intended functions reliably.

4. Testing. Tokens undergo rigorous testing to identify vulnerabilities, verify functionality, and ensure blockchain compatibility. This phase includes security audits, multi-scenario smart contract testing, and platform verification.

5. Token launch. Tokens are deployed to the blockchain through initial coin offerings (ICOs), token sales, or genesis block creation. Teams execute marketing strategies to maximize visibility, drive engagement, and accelerate initial adoption.

6. Post-launch maintenance. Ongoing maintenance includes regular updates, performance monitoring, community support, and optimization based on market behavior and user feedback.

This framework addresses critical challenges in accessing capital for infrastructure expansion, particularly relevant as AI computational demands continue escalating through 2026.
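The tokenomics work in the design stage (supply mechanics and distribution schedules) often starts as a simple spreadsheet-style model. The following is a hypothetical sketch of one: the allocation names, shares, cliffs, and vesting periods are invented for illustration and are not a recommendation or a description of any real token.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allocation:
    name: str
    share_bps: int       # share of total supply in basis points (10,000 = 100%)
    cliff_months: int    # months before any tokens unlock
    vesting_months: int  # months of linear unlocking after the cliff (0 = all at once)


def validate(allocations: list) -> None:
    """Reject a distribution plan whose shares do not add up to exactly 100%."""
    total = sum(a.share_bps for a in allocations)
    if total != 10_000:
        raise ValueError(f"allocations sum to {total} bps, expected 10000")


def unlocked(total_supply: int, alloc: Allocation, month: int) -> int:
    """Tokens from one allocation that have unlocked by the given month."""
    amount = total_supply * alloc.share_bps // 10_000
    if month < alloc.cliff_months:
        return 0
    if alloc.vesting_months == 0:
        return amount
    elapsed = min(month - alloc.cliff_months, alloc.vesting_months)
    return amount * elapsed // alloc.vesting_months


# Hypothetical plan, for illustration only.
TOTAL_SUPPLY = 1_000_000_000
PLAN = [
    Allocation("community", 5_000, cliff_months=0, vesting_months=0),
    Allocation("team", 2_000, cliff_months=12, vesting_months=24),
    Allocation("treasury", 3_000, cliff_months=0, vesting_months=36),
]

validate(PLAN)
circulating_at_24 = sum(unlocked(TOTAL_SUPPLY, a, 24) for a in PLAN)
print(circulating_at_24)  # 800000000
```

Integer basis points are used instead of floating-point percentages so that the shares can be checked for an exact total.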

AI token development cost varies significantly based on project complexity, customization requirements, and implementation scope. Organizations must evaluate whether custom development or pre-built solutions better serve their strategic objectives and resource constraints.

Custom AI token development services provide complete architectural control, unique feature differentiation, and optimization for specific use cases. Organizations gain the ability to incorporate proprietary AI models, protect intellectual property, and maintain strategic flexibility. Some development teams now commit to delivering an MVP within 90 days.

Pre-built solutions offer faster time-to-market (weeks versus months), lower upfront costs, proven security architecture, and reduced technical risk. However, these solutions have limitations in customization capabilities, constraints on unique differentiation, and a dependence on platform provider roadmaps.

White label AI token solutions have emerged as viable alternatives for businesses seeking faster market entry. These pre-built, customizable platforms allow companies to launch tokenized services under their own brand, avoid lengthy development timelines (launch within weeks), reduce initial capital requirements by 40-60%, access proven security frameworks, and scale infrastructure as user bases grow while maintaining 70-80% of customization capabilities.

The AI token development industry has matured significantly in 2026, with specialized firms offering comprehensive solutions spanning consultation through post-launch optimization. Organizations seeking to hire AI token developers or engage AI tokenization consultation services benefit from evaluating providers across technical expertise, platform specialization, and service comprehensiveness.

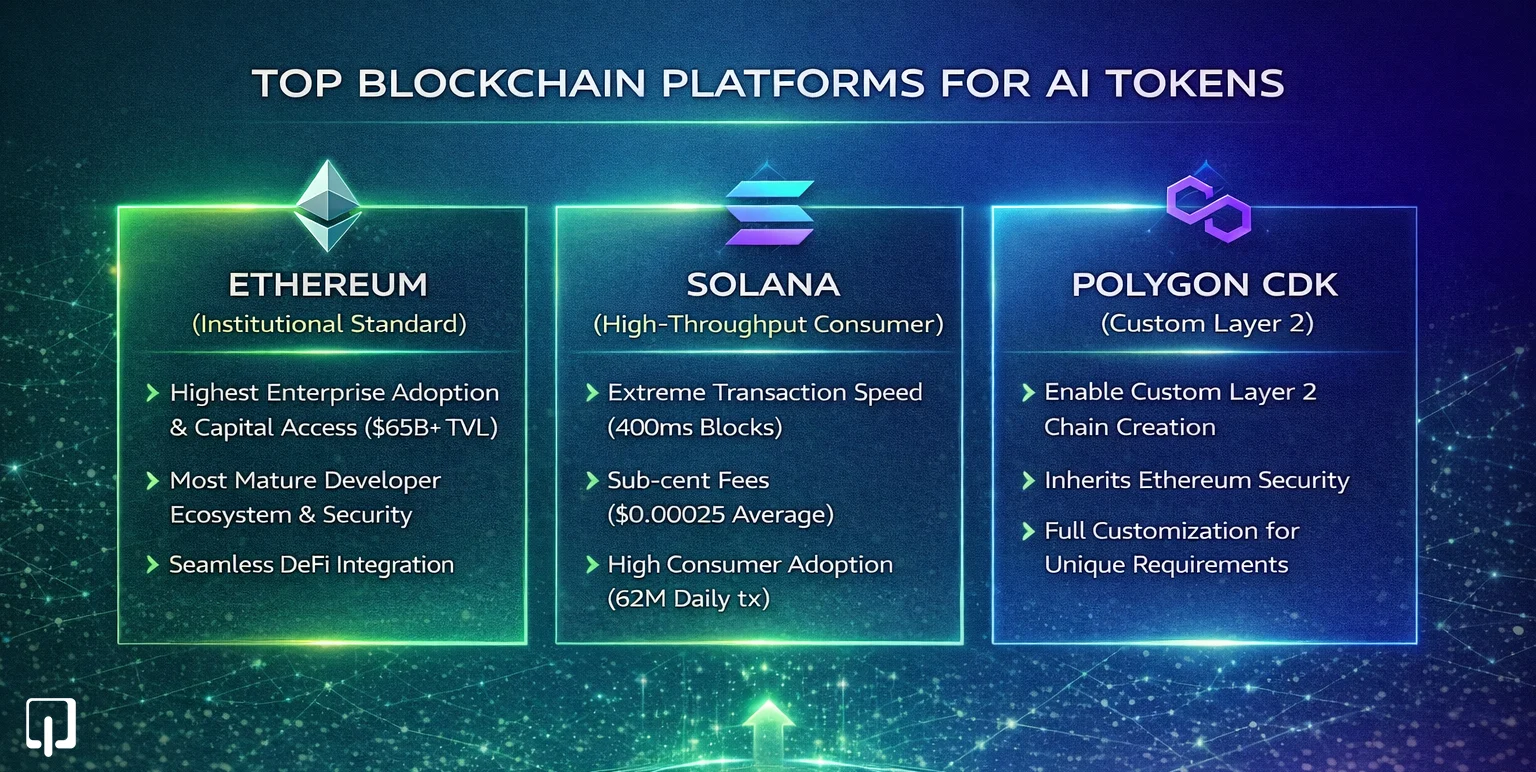

1. Ethereum-based platforms show the highest enterprise adoption, the strongest access to institutional capital, the most mature developer experience, and seamless DeFi integration. Ethereum held $65.77 billion in Total Value Locked (TVL) as of Q3 2025 and captures 53.8% of stablecoin market share, making it the primary settlement hub for decentralized finance.

2. Solana-based platforms excel in consumer adoption with 62 million daily transactions (50x more than Ethereum), fast block times (400ms), sub-cent fees ($0.00025 average), and throughput capacity of 4,000+ transactions per second. Solana suits real-time applications and consumer-facing services, including play-to-earn games that process 2+ million daily token transfers. At the average fee above, 2 million transfers cost about $500 in total.

3. Polygon CDK platforms enable custom Layer 2 chain creation where projects launch independent chains while inheriting Ethereum security. Organizations gain full customization of execution environments and validator sets, optimal for enterprise applications with unique performance requirements while leveraging Ethereum's mature tooling.

AI tokenization delivers transformative advantages for startups and enterprises seeking to scale computational infrastructure and democratize access to high-performance resources. The convergence of blockchain technology and artificial intelligence creates unprecedented opportunities for capital formation, resource allocation, and operational efficiency.

1. Democratized access to capital is the primary benefit. Traditional financing for GPU-intensive AI operations requires substantial upfront capital from institutional investors. Tokenization lets startups fractionalize GPU ownership and infrastructure access, opening funding to a diverse pool of global investors. This overcomes financial bottlenecks that previously limited infrastructure scaling for emerging companies.

2. Reduced barriers to entry allow smaller players to access high-performance hardware without prohibitive upfront investments. Tokenizing AI resources levels competitive playing fields, enabling innovative startups to compete with well-capitalized enterprises. Organizations can now access cutting-edge computational capabilities through fractional ownership models rather than full infrastructure purchases.

3. Efficient resource allocation follows from dynamic, demand-based pricing for infrastructure resources. Market mechanisms allocate AI computational power, and token-based incentives reward participants who contribute unused computational resources to decentralized networks. This creates liquid markets for GPU capacity, data center access, and specialized AI hardware.

4. Improved liquidity materializes as fractional ownership through tokens creates secondary markets where investors buy, sell, and trade infrastructure stakes. This dramatically improves asset liquidity compared to traditional models where infrastructure investments remain illiquid until project completion or exit events.

5. Lower transaction costs result from smart contracts automating revenue distribution and compliance monitoring. Eliminating intermediaries reduces operational costs, directly decreasing capital requirements for scaling AI infrastructure. Automated processes also reduce administrative overhead and accelerate transaction settlement.
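The demand-based pricing mentioned in the third benefit can be illustrated with a toy pricing curve. The formula below is purely hypothetical and not taken from any specific network: it assumes the price of a GPU-hour rises with the square of pool utilization, and `sensitivity` is an invented tuning parameter.

```python
def gpu_hour_price(base_price: float, utilization: float, sensitivity: float = 2.0) -> float:
    """Hypothetical demand-based price: the base price scaled up as the pool fills.

    utilization is the fraction of GPU capacity currently in use (0.0 to 1.0).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    return base_price * (1.0 + sensitivity * utilization ** 2)


for load in (0.0, 0.5, 1.0):
    print(load, gpu_hour_price(1.0, load))  # 1.0, 1.5, 3.0
```

A convex curve like this keeps prices near the base rate while capacity is plentiful and raises them sharply only as the pool approaches full use, which is one way to signal idle providers to bring capacity online.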

Organizations leveraging AI tokenization gain competitive advantages in capital efficiency, operational scalability, and market access. The ability to raise capital globally while maintaining decentralized governance creates sustainable models for long-term growth in computationally intensive industries.

While AI tokens operate on blockchain technology and share characteristics with traditional cryptocurrencies, fundamental differences distinguish these asset classes in purpose, functionality, and value drivers. Understanding these distinctions proves essential for investors, developers, and enterprises evaluating tokenization strategies.

AI tokens function primarily as utility tokens, though some implementations include investment characteristics. Value derives from access to valuable AI services or governance rights rather than purely from scarcity or speculative demand. This orientation toward utility over speculation creates different risk profiles and evaluation frameworks.

Traditional cryptocurrencies like Bitcoin and Ethereum prioritize decentralized currency functionality, with value propositions centered on censorship resistance, programmable money, and trustless transactions. Network effects and adoption as payment mechanisms drive long-term value appreciation.

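AI tokens prioritize access and governance rights within specific AI ecosystems. Token holders gain privileges that include access to AI services and computational resources, voting rights on protocol decisions, and rewards for contributing data or computational power.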

The technical implementation also differs substantially. Cryptocurrencies optimize for security, decentralization, and censorship resistance. AI tokens optimize for efficient resource allocation, governance flexibility, and integration with off-chain AI infrastructure. This necessitates different smart contract architectures, consensus mechanisms, and tokenomics designs.

The convergence of artificial intelligence and blockchain technologies continues accelerating through 2026, with AI tokens positioned at the intersection of multiple transformative trends. Leading forecasts project AI crypto token market dominance driven by decentralized GPU marketplaces, autonomous AI agents powered by blockchain verification, and real-world asset tokenization expansion in enterprise sectors.

Autonomous AI agents represent one of the most significant emerging applications for AI tokens. These agents leverage blockchain for verification, payment settlement, and coordination across distributed networks. Internet Computer's protocol enables on-chain AI execution, allowing developers to build AI applications directly on blockchain infrastructure without relying on centralized cloud providers.

Cortex deploys full deep learning models directly on-chain, allowing smart contracts to call AI models as functions. This enables blockchain applications to leverage AI capabilities natively, potentially replacing static DeFi contracts with AI-powered decentralized applications that adapt to market conditions and user behavior.

Numerai combines predictive AI, token incentives, and real financial markets into an autonomous investment collective. Data scientists worldwide contribute machine learning models trained on encrypted financial data and stake Numerai's Numeraire (NMR) token on them. Successful predictions earn rewards, creating a decentralized approach to quantitative finance.

Investment opportunities in AI tokens demonstrate high-growth potential as real-world AI infrastructure tokenization scales globally. Analysts identify AI crypto tokens among the highest-growth opportunities for 2026, with several projects showing potential for significant value appreciation as distributed AI networks mature and enterprise adoption accelerates.

Governance mechanisms in AI tokens increasingly incorporate sophisticated voting frameworks enabling decentralized decision-making. Token holders use governance tokens to vote on protocol updates, parameter changes, and resource allocation decisions. Smart contracts automate execution of approved proposals, reducing delays and human intervention requirements while maintaining transparency and accountability.
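Token-weighted voting of this kind can be sketched off-chain in a few lines. The example assumes one token equals one vote, a simple majority of votes cast, and a quorum expressed as a percentage of total supply; the 20% default and all names are hypothetical, and real protocols vary widely in their rules.

```python
def tally(balances: dict, votes: dict, quorum_percent: int = 20) -> dict:
    """Tally a token-weighted vote.

    balances maps holder -> token balance; votes maps holder -> "for" or "against".
    Votes from addresses with no recorded balance carry zero weight.
    """
    total_supply = sum(balances.values())
    weight_for = sum(balances.get(voter, 0) for voter, choice in votes.items() if choice == "for")
    weight_against = sum(balances.get(voter, 0) for voter, choice in votes.items() if choice == "against")
    turnout = weight_for + weight_against
    # Integer comparison avoids floating-point rounding at the quorum boundary.
    quorum_met = turnout * 100 >= total_supply * quorum_percent
    return {
        "for": weight_for,
        "against": weight_against,
        "quorum_met": quorum_met,
        "passed": quorum_met and weight_for > weight_against,
    }


balances = {"alice": 500, "bob": 300, "carol": 200}
result = tally(balances, {"alice": "for", "carol": "against"})
print(result)  # {'for': 500, 'against': 200, 'quorum_met': True, 'passed': True}
```

On-chain, the same logic would live in a smart contract that also executes the approved change, which is what removes the manual step described above.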

AI tokens serve two distinct functions. In Natural Language Processing, they represent the smallest linguistic units that Large Language Models use to process text, typically equivalent to approximately four characters or three-quarters of a word. In blockchain contexts, AI tokens are digital assets that provide access to AI infrastructure, enable governance voting, and incentivize participation in decentralized AI networks. Unlike regular utility tokens, AI tokens specifically relate to artificial intelligence services, computational resources, or AI-powered governance mechanisms.

Launching an AI token requires six systematic steps: consultation and feasibility analysis to evaluate market viability, token design establishing architecture and economics, smart contract development implementing automated functions, comprehensive testing to identify vulnerabilities, token launch through ICOs or token sales, and post-launch maintenance for ongoing optimization. Organizations can choose custom development (4-6 months, $50,000-$500,000+) for complete control or white-label solutions (2-4 weeks, 40-60% lower cost) for faster market entry.

Ethereum remains the institutional standard with the largest developer ecosystem, $65.77 billion Total Value Locked, and 53.8% stablecoin market share, making it ideal for enterprise applications requiring maximum security and liquidity. Solana excels for high-throughput consumer applications with 62 million daily transactions, 400ms block times, and sub-cent fees ($0.00025 average). Polygon CDK enables custom Layer 2 chain creation for organizations requiring specialized infrastructure while inheriting Ethereum security. Selection depends on specific requirements for transaction volume, customization needs, and target audience.

The optimal tokenization approach involves four systematic steps: digitize and fractionalize physical infrastructure assets (GPUs, data centers) with ownership recorded on blockchain, set compliance rules establishing ownership rights enforced through smart contracts, commercialize tokens by selling to global investors democratizing access to high-value infrastructure, and automate revenue distribution with earnings flowing directly to token holders based on ownership percentages. This framework addresses capital access challenges while maintaining regulatory compliance and operational transparency.
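The last step, distributing earnings in proportion to ownership, is simple arithmetic that a smart contract can automate. Here is a minimal off-chain sketch: amounts are in whole cents to avoid rounding drift, and the rule for leftover cents (largest holders first) is an arbitrary assumption made so the example is deterministic.

```python
def distribute(revenue_cents: int, holdings: dict) -> dict:
    """Split revenue among token holders pro rata to the units each one holds."""
    total_units = sum(holdings.values())
    if total_units <= 0:
        raise ValueError("no outstanding token units")
    payouts = {holder: revenue_cents * units // total_units for holder, units in holdings.items()}
    # Floor division can leave a few cents undistributed; hand them out one at a time,
    # largest holders first (ties broken by name), so every cent is accounted for.
    remainder = revenue_cents - sum(payouts.values())
    by_size = sorted(holdings, key=lambda holder: (-holdings[holder], holder))
    for holder in by_size[:remainder]:
        payouts[holder] += 1
    return payouts


# A GPU cluster fractionalized into 1,000 units across three hypothetical investors.
holdings = {"alice": 600, "bob": 300, "carol": 100}
print(distribute(10_000, holdings))  # {'alice': 6000, 'bob': 3000, 'carol': 1000}
print(distribute(1_001, holdings))   # {'alice': 601, 'bob': 300, 'carol': 100}
```

The payouts always sum to the revenue received, which is the property an auditor would check first in an automated distribution contract.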

AI tokens increasingly incorporate governance mechanisms enabling decentralized decision-making. Token holders use governance tokens to vote on protocol updates, parameter changes, and resource allocation decisions. Smart contracts automate execution of approved proposals, reducing delays and human intervention. Emerging Decentralized AI Governance Networks (DAGN) use blockchain-based tokenized power control to ensure human-centric AI operation through community-driven development, DAO voting on development priorities, and collective decision-making on token economics adjustments and partnership approvals.

Tokenization provides five critical advantages for scaling AI startups: democratized access to capital by fractionalizing GPU ownership enabling global investor participation, reduced barriers to entry allowing smaller players to access high-performance hardware without prohibitive investments, efficient resource allocation through dynamic demand-based pricing mechanisms, improved liquidity creating secondary markets for infrastructure stakes, and lower transaction costs through smart contract automation eliminating intermediaries. These benefits directly address financial bottlenecks limiting infrastructure scaling.

Six primary tokenization methods serve different applications: word tokenization splits text into individual words for sentiment analysis and classification, character tokenization breaks text into individual characters for spelling correction, subword tokenization divides words into meaningful units handling out-of-vocabulary words in modern LLMs, n-gram tokenization creates contiguous sequences for language modeling, sentence tokenization divides text into sentence units for summarization, and Byte-Pair Encoding iteratively merges frequent character pairs balancing character and word-level approaches for advanced applications.
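Four of these methods are simple enough to show with the standard library alone. The sketch below uses deliberately naive rules (a regular expression for words, punctuation followed by whitespace for sentence boundaries), so treat it as an illustration rather than production-grade text processing. Subword tokenization and BPE are left out because they depend on a learned vocabulary.

```python
import re


def word_tokens(text: str) -> list:
    """Word tokenization: lowercase runs of letters, digits, and underscores."""
    return re.findall(r"\w+", text.lower())


def char_tokens(text: str) -> list:
    """Character tokenization: every character is its own token."""
    return list(text)


def ngrams(tokens: list, n: int) -> list:
    """N-gram tokenization: contiguous sequences of n tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def sentence_tokens(text: str) -> list:
    """Sentence tokenization: naive split after '.', '!' or '?' followed by whitespace."""
    return [part.strip() for part in re.split(r"(?<=[.!?])\s+", text) if part.strip()]


text = "AI tokens matter. They power LLMs!"
words = word_tokens(text)
print(words)                  # ['ai', 'tokens', 'matter', 'they', 'power', 'llms']
print(ngrams(words, 2)[:2])   # [('ai', 'tokens'), ('tokens', 'matter')]
print(sentence_tokens(text))  # ['AI tokens matter.', 'They power LLMs!']
print(char_tokens("AI"))      # ['A', 'I']
```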

AI token development costs range from $25,000-$75,000 for basic utility tokens (4-8 weeks) with standard features, $75,000-$200,000 for standard AI tokens (8-16 weeks) with basic customization, $200,000-$500,000 for advanced AI tokens (16-24 weeks) with significant AI integration, and $500,000-$2,000,000 for enterprise-grade solutions (6-12 months) with proprietary AI models. Cost factors include blockchain platform selection, smart contract complexity, AI integration depth, security audit requirements, compliance automation, and post-launch support needs.

AI agent tokens enable autonomous AI agents to operate on blockchain networks with verification, payment settlement, and coordination capabilities. These tokens allow AI agents to execute transactions, participate in governance, and access computational resources without human intervention. Platforms like Internet Computer enable on-chain AI execution where developers build AI applications directly on blockchain infrastructure. Cortex places deep-learning models on-chain allowing smart contracts to call AI models as functions, enabling AI-powered decentralized applications that adapt to market conditions autonomously.

Token standards vary by blockchain platform: Ethereum implements ERC-20 for fungible tokens, ERC-721 for NFTs, and ERC-1155 for hybrid implementations. Binance Smart Chain uses BEP-20 functionally equivalent to ERC-20. Solana employs SPL Token Standard optimized for its architecture. Polygon supports Ethereum standards via compatibility layers. Emerging compliance standards include security token frameworks (ST-20, ERC-1400) for regulated tokens, privacy standards using zero-knowledge proofs for confidential transactions, and sustainability standards implementing proof of impact for ESG-focused tokens.
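To show what a fungible-token standard actually specifies, here is an in-memory Python model of the core ERC-20 operations (balance lookup, transfer, approve, allowance, and delegated transfer). It is a simulation for illustration only: real ERC-20 tokens are smart contracts deployed on-chain, the caller is identified by the transaction sender rather than passed as an argument, and events and access control are omitted here.

```python
class FungibleToken:
    """Toy in-memory ledger mirroring the core ERC-20 operations."""

    def __init__(self, total_supply: int, owner: str):
        self.total_supply = total_supply
        self._balances = {owner: total_supply}
        self._allowances = {}  # (owner, spender) -> remaining approved amount

    def balance_of(self, account: str) -> int:
        return self._balances.get(account, 0)

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        """Move tokens from sender to recipient, rejecting overdrafts."""
        if amount < 0 or self.balance_of(sender) < amount:
            raise ValueError("invalid amount or insufficient balance")
        self._balances[sender] = self.balance_of(sender) - amount
        self._balances[recipient] = self.balance_of(recipient) + amount

    def approve(self, owner: str, spender: str, amount: int) -> None:
        """Let spender move up to `amount` of owner's tokens."""
        self._allowances[(owner, spender)] = amount

    def allowance(self, owner: str, spender: str) -> int:
        return self._allowances.get((owner, spender), 0)

    def transfer_from(self, spender: str, owner: str, recipient: str, amount: int) -> None:
        """Delegated transfer: spender moves owner's tokens within the approved limit."""
        if self.allowance(owner, spender) < amount:
            raise PermissionError("allowance exceeded")
        self.transfer(owner, recipient, amount)
        self._allowances[(owner, spender)] -= amount


token = FungibleToken(1_000, owner="treasury")
token.transfer("treasury", "alice", 250)
token.approve("alice", "dex", 100)
token.transfer_from("dex", "alice", "bob", 60)
print(token.balance_of("alice"), token.balance_of("bob"), token.allowance("alice", "dex"))  # 190 60 40
```

Because every compliant token exposes the same small interface, wallets and exchanges can support new tokens without custom integration work, which is the main practical value of a shared standard.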

AI tokens represent a critical convergence point where artificial intelligence infrastructure meets blockchain innovation, fundamentally transforming how computational resources are allocated, financed, and governed. Understanding both dimensions of AI tokens proves essential for organizations seeking competitive advantage in 2026. From NLP fundamentals where tokens enable machines to process language through systematic tokenization methods, to blockchain implementations democratizing access to GPU infrastructure through fractional ownership models, AI tokens are reshaping technology development paradigms.

The market trajectory points to strong growth potential as the global GPU market expands from $65.27 billion in 2024 to a projected $274.21 billion by 2029. Organizations with a solid grasp of token mechanics, development processes, and blockchain platform selection are well positioned to capitalize on these opportunities. Choosing between custom and pre-built development, and between Ethereum's institutional strength, Solana's throughput, and Polygon's customization flexibility, largely determines project success in increasingly competitive markets.

For startups and enterprises considering AI tokenization strategies, the convergence of proven development methodologies, maturing blockchain infrastructure, and escalating AI computational demand creates favorable conditions for innovation and capital formation. The systematic development process from consultation through post-launch optimization, combined with specialized services from leading development companies, reduces technical barriers while accelerating time-to-market. As autonomous AI agents, decentralized governance frameworks, and cross-chain interoperability mature through 2026, AI tokens will continue driving technological evolution across industries requiring high-performance computational infrastructure.

Copyright © 2026 Webmob Software Solutions