February 10, 2026


When Bitcoin's price first crossed $1,000 in 2013, few predicted that its underlying technology would soon revolutionize everything from cross-border payments to supply chain finance.

Blockchain introduced a new paradigm. By decentralizing ownership and transaction mechanisms, it has redefined what it means to hold and transfer value. Yet, as transformative as blockchain is, a more profound shift is taking place right now, one that integrates Artificial Intelligence (AI) into the very foundation of asset ownership and management. This breakthrough is known as AI tokenization.

In this post, we explore the transformative potential of AI-powered tokenization, its real-world applications, its challenges, and its future role in reshaping finance and asset management.

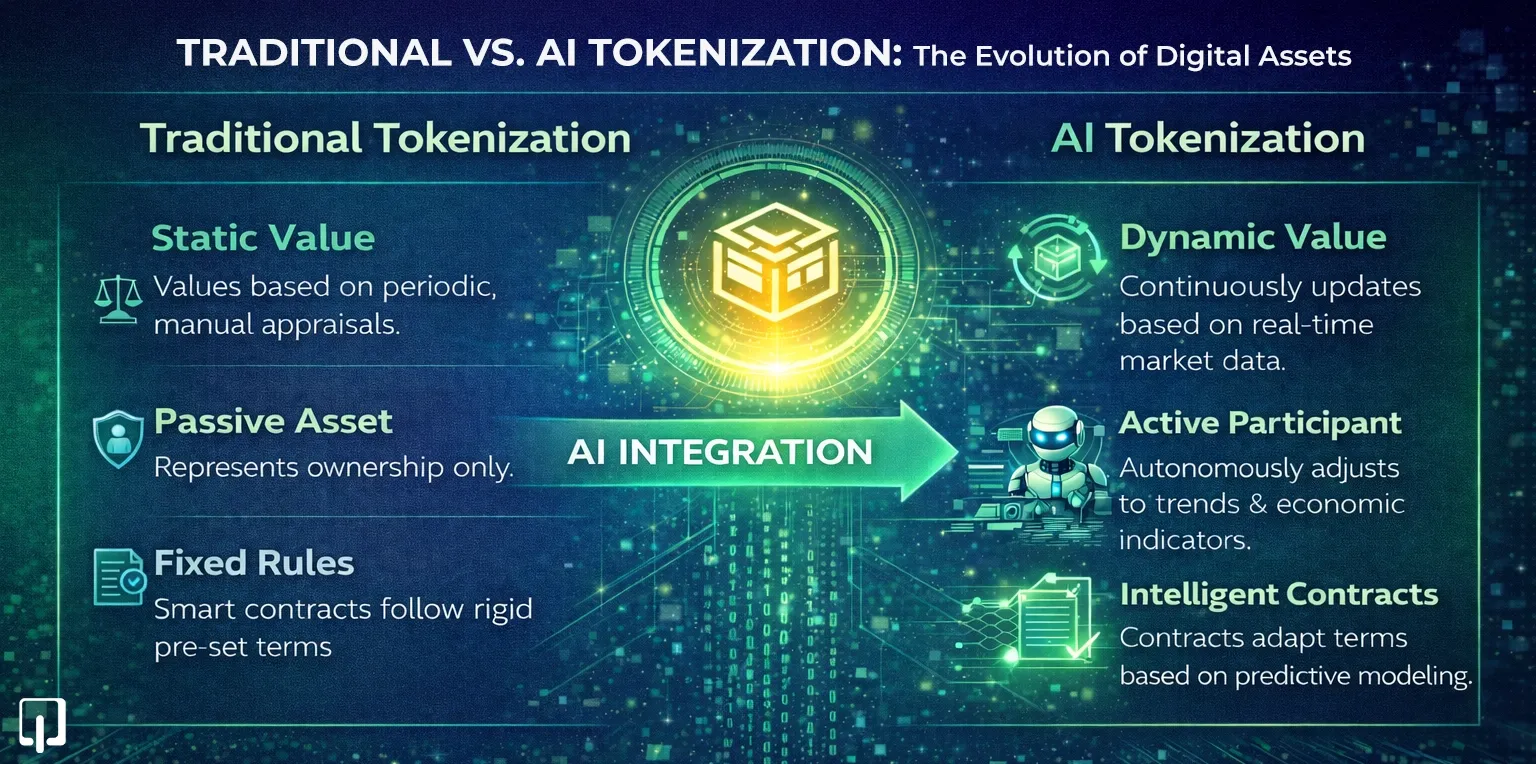

To understand AI tokenization, we first need to define tokenization in the traditional sense. Tokenization is the process of converting real-world assets or digital goods into blockchain-based digital tokens. These tokens represent ownership or value and are governed by smart contracts. This can apply to assets like real estate, stocks, intellectual property, or even works of art.

However, AI tokenization takes this process a step further by incorporating machine learning, predictive modeling, and autonomous decision-making into the token lifecycle.

Traditional tokenization relies on static, predetermined models that assign a fixed value to assets. AI-powered tokenization, on the other hand, continuously adapts and evolves the value and behavior of the tokenized assets by utilizing real-time data, predictive analytics, and AI-based governance.

This means that AI tokens are active participants in the financial ecosystem, adjusting to market trends, investor sentiment, and macroeconomic conditions. For example, imagine a tokenized real estate asset that automatically adjusts its valuation based on shifts in local market demand, economic indicators, or even global geopolitical events. This is the power of AI tokenization.

Traditional tokenization has already made a significant impact by increasing liquidity and access to otherwise illiquid markets, such as real estate and collectibles. However, several challenges remain that AI tokenization is uniquely positioned to address:

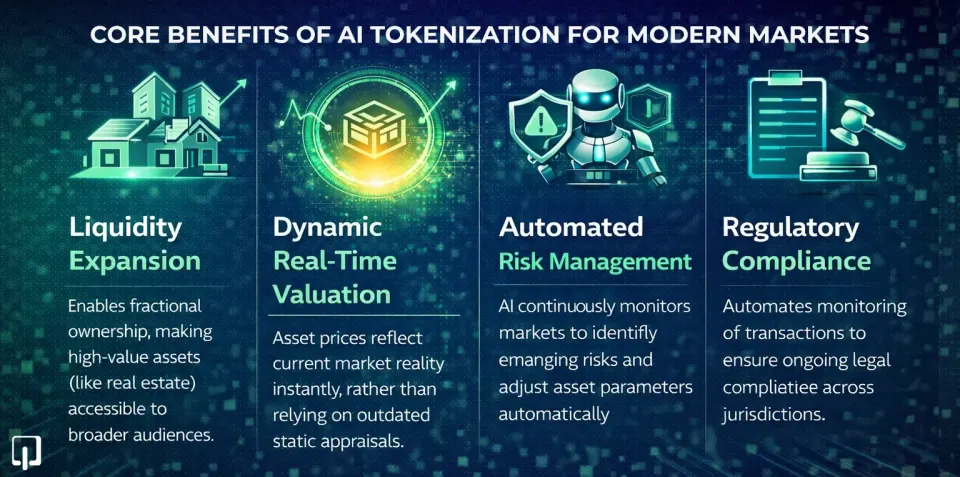

Historically, markets such as real estate or fine art have been considered highly illiquid due to the complexity and high costs of ownership. These assets were traditionally out of reach for smaller investors. AI-powered tokenization solves this problem by enabling fractional ownership.

For example, a tokenized real estate property can be divided into hundreds or thousands of fractional shares, making it affordable and accessible to a broader audience. Furthermore, AI tokenization can continuously track asset performance and adjust liquidity provisions accordingly, further optimizing market conditions.
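To make fractional ownership concrete, here is a minimal off-chain sketch in Python. The `FractionalAsset` class, its fields, and the sample numbers are hypothetical and chosen only for illustration; a real deployment would keep this ledger in a smart contract rather than in memory.

```python
from dataclasses import dataclass, field


@dataclass
class FractionalAsset:
    """Hypothetical tokenized asset split into equal fractional shares."""
    name: str
    valuation_cents: int   # whole-asset valuation, in cents to avoid float rounding
    total_shares: int
    holdings: dict[str, int] = field(default_factory=dict)

    def share_price_cents(self) -> int:
        # Price of one share, rounded down to a whole cent.
        return self.valuation_cents // self.total_shares

    def shares_available(self) -> int:
        return self.total_shares - sum(self.holdings.values())

    def buy(self, investor: str, shares: int) -> int:
        """Allocate shares to an investor and return the cost in cents."""
        if shares <= 0:
            raise ValueError("shares must be positive")
        if shares > self.shares_available():
            raise ValueError("not enough shares available")
        self.holdings[investor] = self.holdings.get(investor, 0) + shares
        return shares * self.share_price_cents()

    def ownership_fraction(self, investor: str) -> float:
        return self.holdings.get(investor, 0) / self.total_shares


# A $500,000 property split into 10,000 shares of $50 each.
building = FractionalAsset("Example building", 50_000_000, 10_000)
cost = building.buy("alice", 20)   # 20 shares
```

With these sample numbers, an investor can take a position in the property for $1,000 instead of needing the full purchase price.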

One of the limitations of traditional tokenization models is that asset valuations often rely on static data and periodic appraisals. With AI tokenization, asset values are continuously updated in real time based on incoming data feeds such as market sentiment, trading volumes, and economic conditions.

AI models analyze these variables and adjust the asset's value dynamically, providing a more accurate reflection of the asset's current market position.

This is particularly important in sectors like real estate, where asset prices can fluctuate significantly based on economic cycles, demographic trends, and interest rates. With AI-powered tokenization, the token's value is always aligned with current market conditions, reducing valuation errors and improving investor confidence.
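As a rough illustration of dynamic valuation, the sketch below nudges a token's valuation by a weighted sum of incoming signals and caps the size of each update. The function name `update_valuation`, the signal names, the weights, and the 2% cap are all assumptions made for this example; hand-set weights stand in here for whatever trained model a real system would use.

```python
def update_valuation(
    current: float,
    signals: dict[str, float],
    weights: dict[str, float],
    max_step: float = 0.02,
) -> float:
    """Return a new valuation after one update.

    Each signal is multiplied by its weight; the summed score is treated as
    a fractional price change and clamped to +/- max_step per update.
    Signals without a weight are ignored.
    """
    score = sum(weights.get(name, 0.0) * value for name, value in signals.items())
    step = max(-max_step, min(max_step, score))
    return current * (1.0 + step)


# Hypothetical weights: positive sentiment lifts value, rate hikes lower it.
weights = {"sentiment": 0.02, "rate_change": -0.04}
new_value = update_valuation(1_000.0, {"sentiment": 0.5, "rate_change": 0.1}, weights)
```

The cap is a deliberate design choice in this sketch: it keeps a single noisy data point from moving the valuation sharply.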

Risk management is a cornerstone of any investment strategy, yet traditional methods often rely on historical data and human interpretation. AI tokenization takes this a step further by using machine learning algorithms to continuously monitor assets for potential risks. By analyzing vast amounts of data, including market trends, economic indicators, and investor behavior, AI models can identify emerging risks early and automatically adjust asset holdings, token allocations, or even smart contract terms to mitigate those risks.

For example, an AI-tokenized security could automatically reduce its exposure to a volatile market sector or adjust its risk parameters based on predictions about future economic downturns.
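One simple way to picture automatic exposure reduction is a volatility-targeting rule, sketched below. This is a plain statistical rule, not a machine learning model, and the function name `target_exposure` and the 5% volatility limit are assumptions made for this example.

```python
import statistics


def target_exposure(
    returns: list[float],
    vol_limit: float,
    base_exposure: float = 1.0,
) -> float:
    """Scale exposure down in proportion to how far volatility exceeds vol_limit.

    `returns` is a window of recent periodic returns. With fewer than two
    observations there is no volatility estimate, so exposure is unchanged.
    """
    if len(returns) < 2:
        return base_exposure
    vol = statistics.stdev(returns)   # sample standard deviation
    if vol <= vol_limit:
        return base_exposure
    return base_exposure * vol_limit / vol


calm = [0.01, 0.01, 0.01, 0.01]
choppy = [0.10, -0.10, 0.10, -0.10]
print(target_exposure(calm, vol_limit=0.05))    # stays at full exposure
print(target_exposure(choppy, vol_limit=0.05))  # cut to well under half
```

A production system would likely replace the realized-volatility estimate with a forecast, but the control loop of measuring risk and then resizing the position keeps the same shape.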

Regulatory compliance is a major challenge in the world of tokenized assets. AI tokenization solutions are designed to automate compliance by constantly monitoring transactions and ensuring they meet the legal standards required in different jurisdictions.

AI can analyze data in real time to ensure that tokenized assets comply with the relevant regulatory frameworks, reducing the need for manual oversight and minimizing the risk of legal violations.
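Before any AI is involved, automated compliance usually starts with explicit rules checked on every transfer. The sketch below shows that baseline under invented assumptions: the `Investor` fields, the `check_transfer` function, the blocked-jurisdiction set, and the amount limit are hypothetical and do not reflect any particular regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Investor:
    investor_id: str
    jurisdiction: str    # e.g. a country code
    kyc_verified: bool


def check_transfer(
    sender: Investor,
    receiver: Investor,
    amount: int,
    blocked_jurisdictions: set[str],
    max_amount: int,
) -> list[str]:
    """Return a list of rule violations; an empty list means the transfer passes."""
    violations = []
    for role, party in (("sender", sender), ("receiver", receiver)):
        if not party.kyc_verified:
            violations.append(f"{role} {party.investor_id} is not KYC-verified")
        if party.jurisdiction in blocked_jurisdictions:
            violations.append(f"{role} jurisdiction {party.jurisdiction} is blocked")
    if amount > max_amount:
        violations.append(f"amount {amount} exceeds limit {max_amount}")
    return violations


alice = Investor("alice", "DE", kyc_verified=True)
bob = Investor("bob", "XX", kyc_verified=False)
for problem in check_transfer(alice, bob, 500, {"XX"}, max_amount=100):
    print(problem)
```

Returning every violation rather than stopping at the first gives an audit trail, and a learned anomaly detector could be layered on top of these fixed rules.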

The first stage of AI tokenization involves gathering a wide range of data relevant to the asset being tokenized. This could include:

This data is then processed and transformed into actionable features for machine learning models. These features might include factors like asset volatility, expected growth, or potential risks. AI tokenization systems continuously ingest new data, ensuring that the asset's valuation and governance models remain up to date.
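As an illustration of turning raw data into model features, the sketch below derives a few common features from a price history. The function name `build_features` and the choice of features are assumptions for this example; a real pipeline would draw on many more data sources.

```python
import statistics


def build_features(prices: list[float]) -> dict[str, float]:
    """Derive simple features from a chronological list of positive prices."""
    if len(prices) < 2:
        raise ValueError("need at least two prices")
    # Period-over-period simple returns.
    returns = [(b - a) / a for a, b in zip(prices, prices[1:])]
    # Largest peak-to-trough decline seen so far, as a fraction of the peak.
    peak = prices[0]
    max_drawdown = 0.0
    for price in prices:
        peak = max(peak, price)
        max_drawdown = max(max_drawdown, (peak - price) / peak)
    return {
        "mean_return": statistics.fmean(returns),
        "volatility": statistics.pstdev(returns),
        "total_growth": prices[-1] / prices[0] - 1.0,
        "max_drawdown": max_drawdown,
    }


features = build_features([100.0, 120.0, 90.0, 110.0])
```

Re-running the function each time a new price arrives is the simplest form of the continuous ingestion described above.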

The AI tokenization process uses machine learning algorithms to develop predictive models that forecast the future value of tokenized assets. These algorithms can assess an asset's current market position and predict how it will perform under different conditions.

For example, tokenized real estate can be dynamically valued based on economic trends, property sales in nearby areas, or changes in consumer behavior. These models adapt and learn over time, improving their accuracy as they process more data.
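The simplest possible predictive model is a straight-line trend fitted by ordinary least squares, sketched below. It stands in for the richer machine learning models the text describes; the function names `fit_line` and `forecast` and the sample valuations are hypothetical.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two or more paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast(xs: list[float], ys: list[float], x_next: float) -> float:
    """Extrapolate the fitted trend to a future point."""
    slope, intercept = fit_line(xs, ys)
    return slope * x_next + intercept


# Quarterly valuations (in thousands) for quarters 0..3; predict quarter 4.
quarters = [0.0, 1.0, 2.0, 3.0]
values = [100.0, 102.0, 104.0, 106.0]
next_value = forecast(quarters, values, 4.0)
```

Refitting on a growing window of observations is a basic version of a model that improves as it processes more data.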

Traditional smart contracts define the rules and terms for token creation and transactions on the blockchain. With AI tokenization, these smart contracts become much more sophisticated. AI models are integrated into the contract logic, allowing the contract to adjust terms based on changing conditions. For example, a tokenized real estate asset might automatically reduce its transfer fee if market demand for properties in the area drops or increase the fee if demand rises.
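The demand-based fee adjustment can be sketched as a small pure function. This is written in Python for readability rather than in a contract language, and the function name `adjusted_fee_bps`, the demand index convention (1.0 is neutral), the sensitivity, and the fee bounds are all assumptions for this example.

```python
def adjusted_fee_bps(
    base_fee_bps: int,
    demand_index: float,
    sensitivity: float = 0.5,
    min_bps: int = 10,
    max_bps: int = 200,
) -> int:
    """Scale a transfer fee (in basis points) with market demand.

    demand_index is 1.0 at neutral demand, below 1.0 when demand drops,
    and above 1.0 when it rises. The result is clamped to [min_bps, max_bps]
    so that the fee can never leave a pre-agreed range.
    """
    raw = base_fee_bps * (1.0 + sensitivity * (demand_index - 1.0))
    return max(min_bps, min(max_bps, round(raw)))


print(adjusted_fee_bps(100, 0.8))  # weaker demand lowers the fee
print(adjusted_fee_bps(100, 1.4))  # stronger demand raises it
```

In practice the demand index would come from an off-chain model through an oracle, and the hard bounds are what let token holders trust a fee they do not set by hand.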

In addition to adjusting transaction terms, AI tokenization can also facilitate automated decisions such as dividend distributions, risk mitigation measures, and portfolio rebalancing, all driven by AI models.

Governance of tokenized assets is another area where AI-powered tokenization excels. Traditionally, governance decisions (such as voting on changes to an asset's structure or distribution) are made by humans. With AI tokenization, governance can be optimized through reinforcement learning and predictive models.

These AI models can assess the long-term impact of different governance decisions and suggest the most beneficial actions based on data and past outcomes. AI-driven governance can ensure that decision-making aligns with long-term investment strategies or adjusts based on evolving market conditions.
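A heavily simplified version of learning from past outcomes is to keep a running average of results per governance action and recommend the best performer. The sketch below does only that; it omits the exploration and long-horizon reasoning that full reinforcement learning involves. The class `OutcomeTracker` and the action names are hypothetical.

```python
from collections import defaultdict


class OutcomeTracker:
    """Track the average observed outcome of each governance action."""

    def __init__(self) -> None:
        self.counts: dict[str, int] = defaultdict(int)
        self.means: dict[str, float] = defaultdict(float)

    def record(self, action: str, outcome: float) -> None:
        # Incremental mean: no need to store the full history.
        self.counts[action] += 1
        self.means[action] += (outcome - self.means[action]) / self.counts[action]

    def recommend(self, candidates: list[str]) -> str:
        """Return the candidate with the best average outcome so far.

        `candidates` must be non-empty. Actions never tried rank last.
        """
        def score(action: str) -> float:
            if self.counts.get(action, 0) == 0:
                return float("-inf")
            return self.means[action]

        return max(candidates, key=score)


tracker = OutcomeTracker()
tracker.record("raise_dividend", 0.02)
tracker.record("raise_dividend", 0.04)
tracker.record("buyback", 0.01)
print(tracker.recommend(["raise_dividend", "buyback", "do_nothing"]))
```

A recommendation like this is best treated as an input to human voters rather than as a replacement for them.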

As the market for AI tokenization solutions grows, numerous platforms and infrastructure providers have emerged to help companies and enterprises build and manage AI-tokenized assets.

These platforms offer the tools and frameworks necessary to create, manage, and trade AI-powered tokens. Key features typically include:

Leading platforms enable users to seamlessly integrate AI into their tokenization systems, allowing for continuous improvement and automation throughout the asset lifecycle.

For enterprises looking to implement AI tokenization, working with asset tokenization development companies is crucial. These companies specialize in providing customized solutions that span multiple dimensions. They handle AI model integration tailored to specific asset classes, ensuring the technology aligns with the unique characteristics of real estate, commodities, or financial instruments.

Their expertise extends to smart contract design and deployment, building the technical foundation for token operations. To ensure flexibility across ecosystems, they enable cross-chain interoperability so assets can be traded across different blockchain networks. Additionally, they automate regulatory and compliance processes, streamlining the legal aspects of tokenization that would otherwise require extensive manual oversight.

For large enterprises, AI tokenization solutions offer a comprehensive suite of tools that automate key business processes. These solutions typically focus on areas like:

By integrating AI into these solutions, enterprises can streamline their operations, reduce costs, and offer more dynamic and accessible asset classes to their clients.

One of the most promising use cases for AI-powered tokenization is in real estate. Traditionally, real estate has been a highly illiquid asset class, with expensive entry costs and slow transfer times. By using AI tokenization, real estate assets can be broken down into fractional shares, allowing smaller investors to access properties they wouldn't have been able to afford otherwise.

Real estate tokenization powered by AI also brings dynamic valuation models that adjust based on factors like property demand, interest rates, and local economic conditions. This keeps the tokenized asset's value in line with real-time market conditions.

Non-fungible tokens (NFTs) represent unique digital assets like artwork, collectibles, and rare items. AI tokenization plays a significant role in enhancing the valuation and trading of NFTs by analyzing real-time data such as social media sentiment, artist popularity, and market trends.

AI-driven platforms can automatically adjust the price of NFTs based on these factors, ensuring that tokens are always priced in accordance with current market demand.

AI tokenization is also making waves in the agricultural sector, where commodities like crops and livestock are being tokenized. AI models can predict crop yields, assess environmental factors, and adjust token prices in real time. This allows farmers and investors to access fractional ownership in agricultural assets and ensures that token values remain responsive to changing conditions like weather patterns and market demand.

Despite the significant promise of AI tokenization, several challenges remain.

The future of AI tokenization looks promising. As AI and blockchain technologies continue to mature, we can expect more autonomous financial ecosystems, in which assets do more than exist on a ledger: they make decisions based on real-time data and predictions.

Here are some key trends to watch:

AI tokenization represents a fundamental shift in the way we think about digital assets. It is about digitizing ownership and, beyond that, creating assets that are intelligent, dynamic, and responsive to the world around them. Through AI-powered tokenization, industries can improve liquidity, automate decision-making, and create more efficient, transparent markets.

As AI tokenization platforms continue to evolve, businesses that embrace this technology will be at the forefront of a digital revolution, changing how we manage, trade, and value assets in the years to come.

Copyright © 2026 Webmob Software Solutions